AI and LLMs cannot truly render JavaScript the way browsers do, and this creates real visibility risks for modern websites.

Many sites rely heavily on JavaScript for content, layouts, and data loading. The problem is that AI crawlers and LLMs often see only raw HTML, not what users see after scripts run. This gap affects AI search visibility, AI Overviews, and content reuse in generative answers.

This cluster explains what “JavaScript rendering” really means, how browsers handle it, and why LLM JavaScript rendering works very differently. You’ll learn which crawlers can execute JS, which cannot, and how this impacts indexing, rankings, and AI citations.

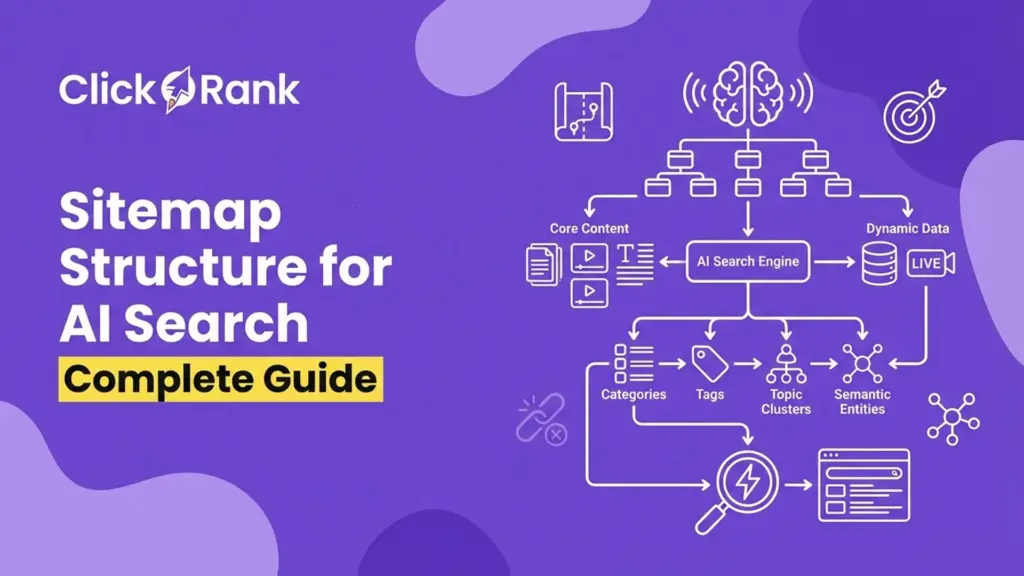

This cluster is part of Technical SEO for AI Crawlers & Modern Search Engines and connects with related clusters such as Best Sitemap Structure for AI Search and Which Crawlers to Allow or Block. By the end, you’ll know how to make JavaScript sites visible to both search engines and AI systems.

What Does “Rendering JavaScript” Actually Mean?

Rendering JavaScript means executing scripts to generate or modify page content after the initial HTML loads.

When a browser renders JavaScript, it runs client-side code that injects text, links, images, and data into the page. This is common in SPAs and modern frameworks where content is not present in raw HTML.

This matters in 2026 because LLM JavaScript rendering is not the same as browser rendering. Many AI crawlers fetch HTML but do not fully execute JavaScript. If content appears only after scripts run, those crawlers may never see it.

HTML parsing reads static markup, while JavaScript execution runs code that changes the page. Parsing HTML is fast and lightweight. Executing JavaScript requires CPU, memory, and time.

Most AI crawlers stop at HTML parsing. They do not build a full DOM or wait for scripts.

Key difference:

- HTML parsing = immediate visibility

- JavaScript execution = conditional visibility

This gap explains why static HTML for AI bots is still critical in modern SEO.
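To see the gap in practice, here is a minimal Python sketch of a fetch-and-parse reader built only on the standard library's html.parser. It collects text nodes from raw HTML and skips script and style bodies, so it never executes JavaScript. It illustrates the idea only and is not how any particular AI crawler is implemented; the two sample pages are hypothetical.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text from raw HTML the way a non-rendering crawler might:
    markup is read as delivered, and script/style bodies are ignored."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.chunks.append(text)


def visible_text(html: str) -> str:
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical pages: a client-rendered shell and a server-rendered equivalent.
spa_shell = (
    '<html><body><div id="root"></div>'
    '<script>document.getElementById("root").textContent = "Pricing";</script>'
    "</body></html>"
)
ssr_page = (
    '<html><body><div id="root"><h1>Pricing</h1><p>Plans start at $10.</p></div>'
    '<script src="/bundle.js"></script></body></html>'
)

print(repr(visible_text(spa_shell)))  # ''
print(visible_text(ssr_page))  # Pricing Plans start at $10.
```

The word "Pricing" in the shell exists only inside the script, so it never reaches the parser's output. That is what "conditional visibility" means for a reader that does not execute code.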

Why does rendering matter for content visibility?

Rendering determines whether content is visible to search engines and AI systems. If content exists only after JavaScript runs, AI tools may miss it completely.

This directly impacts rankings, indexing, and AI Overview inclusion. LLMs prefer content that is immediately available in raw HTML.

Rule of thumb:

If “View Source” doesn’t show your content, AI likely can’t see it.

What happens when JavaScript fails to render?

When JavaScript fails, pages may appear empty, incomplete, or broken to crawlers. Important text, links, and schema can disappear from AI visibility entirely.

Why is raw HTML still the foundation of the web?

Raw HTML is universally readable, fast, and reliable. It remains the most trusted format for AI crawlers, indexing systems, and LLM ingestion.

How Traditional Browsers Render JavaScript

Traditional browsers render JavaScript by fully loading, parsing, and executing HTML, CSS, and scripts in a controlled runtime environment.

A browser does much more than fetch files. It builds page structure, applies styles, runs JavaScript, and updates the screen step by step. This full process is why browsers can display dynamic content accurately.

This matters for AI crawlers and JavaScript because most AI systems do not replicate this environment. Browsers are designed for users, not large-scale crawling. They can wait, execute, reflow layouts, and react to events.

Understanding how browsers render explains why LLM JavaScript rendering is limited and why relying only on client-side logic creates visibility gaps for AI search and indexing.

How do browsers process HTML, CSS, and JavaScript?

Browsers parse HTML incrementally, load CSS alongside it, and execute JavaScript as script tags are reached (or later, for async and defer scripts).

HTML is parsed into structure, CSS is applied for styling, and JavaScript runs to modify or extend the page.

JavaScript can block rendering, delay content, or inject new elements. Browsers manage this carefully to keep pages usable.

This matters for AI crawlers because scripts depend on timing and order, which most crawlers skip for efficiency.

Best practice:

- Deliver meaningful content in HTML

- Let JavaScript enhance, not define, visibility

What role does the DOM play in rendering?

The DOM is the live page structure browsers use to render and update content. JavaScript interacts with the DOM to add text, links, and data dynamically.

Without a fully built DOM, scripts cannot work correctly. Most AI crawlers do not build or maintain a live DOM.

This is why tokens ≠ rendered DOM and why LLMs rely on pre-rendered content instead of live page states.

Why does rendering require a full browser environment?

Rendering JavaScript requires a complete runtime with engines, memory, and event handling. Browsers include JavaScript engines, layout engines, and event loops working together.

AI crawlers avoid this complexity because it is slow and costly at scale. As a result, AI crawler JS support is limited by design, not by capability.

Why do scripts need CPU, memory, and event loops?

Scripts must execute logic, wait for events, and update layouts. This requires active processing, not simple text fetching.

Why is rendering expensive at scale?

Running JavaScript for billions of pages consumes massive resources. That cost is why most AI systems prefer static HTML for AI bots.

Can AI and LLMs Render JavaScript?

No, AI models and LLMs do not render JavaScript like browsers do. LLMs are text-based systems. They do not load pages, execute scripts, or wait for client-side code to run. Instead, they consume already-available content that has been fetched, processed, or pre-rendered by other systems.

This distinction is critical for LLM JavaScript rendering and AI crawler JS support. If your content depends on client-side execution, most AI systems will never see it. This directly affects AI search visibility, citations, and inclusion in AI Overviews.

In short, AI reads outcomes, not processes. It relies on static or server-rendered content rather than live JavaScript execution, which is why HTML-first delivery still wins in 2026.

Do LLMs execute JavaScript like browsers?

No, LLMs do not execute JavaScript or behave like headless browsers. They do not have a DOM, event loop, or runtime environment to run scripts.

LLMs receive text inputs that are already extracted from web pages. If JavaScript was required to generate that text, it must have been rendered earlier by another system.

This is why the question “Can AI render JavaScript?” is often misunderstood. AI can read results, but it cannot run code.

Practical takeaway:

If JavaScript creates your main content, LLMs will likely miss it entirely.

Why can’t large language models run client-side code?

LLMs cannot run client-side code because they are prediction engines, not execution engines. They generate tokens based on patterns, not live computation.

Running JavaScript requires CPU cycles, memory allocation, and real-time interaction with a page state. LLMs are not built for that.

At scale, executing JS for every page would be slow, costly, and unnecessary for language prediction tasks. This is why static HTML for AI bots remains the safest delivery method.

What content do LLMs actually see?

LLMs see pre-rendered, extracted, or static content, not live page states. They rely on data pipelines that pull visible text, headings, and semantic HTML.

If content is hidden behind hydration, infinite scroll, or delayed JS calls, it may never enter those pipelines.

Rule:

If content is present in the initial HTML response, LLMs can see it. If not, visibility is uncertain.

Do LLMs only read static HTML?

Mostly yes. LLMs depend heavily on static or server-rendered HTML output.

Can LLMs interpret JS logic without execution?

They can understand JavaScript syntax as text, but not its runtime output or effects.

Difference Between AI Models and Web Crawlers

AI models and web crawlers serve different roles and work in fundamentally different ways. Search engine crawlers are built to fetch, render, and index web pages at scale. LLMs are built to process text and generate answers. They do not crawl the web live or render pages themselves.

This difference is critical for understanding how AI handles JavaScript. Many visibility issues happen because site owners assume AI behaves like Googlebot. It does not.

Understanding this gap helps explain why JavaScript SEO and LLMs require an HTML-first approach. Crawlers collect content; LLMs consume already-collected, structured text.

How are LLMs different from search engine crawlers?

LLMs are content consumers, while search engine crawlers are content collectors. Crawlers fetch URLs, parse HTML, sometimes render JavaScript, and store indexed content.

LLMs do not fetch pages directly. They receive text that has already been extracted or processed.

This means LLMs never decide what to crawl. They can only use what is made available through pre-rendered content, APIs, or indexed data.

SEO implication:

If crawlers cannot reliably access your content, LLMs will not see it either.

Why aren’t LLMs headless browsers?

LLMs are not headless browsers because they lack execution environments. They have no DOM, no JavaScript engine, and no event loop.

Headless browsers simulate real user environments. LLMs simulate language understanding.

Running a browser for every AI query would be slow, expensive, and unnecessary for text generation.

This is why AI crawler JS support is limited and why dynamic content often fails for AI visibility.

What data format do LLMs ingest from websites?

LLMs ingest cleaned, structured text, not live HTML or scripts. This includes headings, paragraphs, lists, and semantic signals extracted from pages.

JavaScript files, runtime states, and DOM mutations are not part of this input.

Best practice:

- Use semantic HTML

- Ensure important text exists without JS execution
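As a rough illustration of "cleaned, structured text," the Python sketch below reduces semantic HTML to (tag, text) blocks and drops scripts. Real ingestion pipelines are not public and will differ; the tag set and the sample page are assumptions chosen for the example. Nested block tags are not handled: the innermost tag wins.

```python
from html.parser import HTMLParser
from typing import Optional

# Assumed set of "content" tags; a real pipeline may keep more (tables, h4-h6, etc.).
BLOCK_TAGS = {"h1", "h2", "h3", "p", "li"}


class BlockExtractor(HTMLParser):
    """Reduces semantic HTML to (tag, text) blocks, ignoring script and style."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[tuple[str, str]] = []
        self._current: Optional[str] = None
        self._buffer: list[str] = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._in_script = True
        elif tag in BLOCK_TAGS:
            # Start a new block; inline tags like <strong> just add their text.
            self._current, self._buffer = tag, []

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._in_script = False
        elif tag == self._current:
            text = " ".join("".join(self._buffer).split())  # collapse whitespace
            if text:
                self.blocks.append((tag, text))
            self._current = None

    def handle_data(self, data):
        if self._current and not self._in_script:
            self._buffer.append(data)


page = """
<article>
  <h1>Can AI render JavaScript?</h1>
  <p>Most AI crawlers read the <strong>initial HTML</strong> only.</p>
  <ul><li>Use SSR or SSG</li><li>Keep schema static</li></ul>
  <div id="app"></div>
  <script>document.getElementById("app").textContent = "Injected later";</script>
</article>
"""

extractor = BlockExtractor()
extractor.feed(page)
extractor.close()
for tag, text in extractor.blocks:
    print(tag, "|", text)
# h1 | Can AI render JavaScript?
# p | Most AI crawlers read the initial HTML only.
# li | Use SSR or SSG
# li | Keep schema static
```

The text the script would inject ("Injected later") never becomes a block, while every heading, paragraph, and list item in the markup does.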

Why aren’t tokens the same as a rendered DOM?

Tokens are language units, not visual page structures. They represent text, not layout or interactive states.

Why do LLMs rely on pre-rendered content?

Pre-rendered content is stable, fast to process, and scalable. That makes it ideal for AI systems operating at massive scale.

Which Crawlers Can Render JavaScript And Which Cannot

Only a small number of search engine crawlers can render JavaScript, while most AI crawlers cannot.

This difference is one of the biggest causes of confusion in discussions about AI crawlers and JavaScript. Some bots use limited rendering, others fetch raw HTML only, and LLM-focused crawlers usually skip JavaScript entirely.

For LLM JavaScript rendering, this means visibility depends on who is crawling your site. A page that works fine for users may still fail for AI systems if content is created client-side.

Knowing which crawlers execute JS and which do not is essential for JavaScript SEO and LLMs in 2026.

Can Googlebot render JavaScript?

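Yes, Googlebot can render JavaScript, but with limits and delays. Google’s pipeline is often described as two waves: it first processes the raw HTML, then queues the page for rendering in an evergreen headless Chromium and uses the rendered result for indexing. The rendering step can lag behind the initial crawl.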

However, rendering is not guaranteed. Heavy scripts, blocked resources, or timing issues can cause content to be missed.

Key rule:

Important content should exist in initial HTML, even if Google can render JS.

Can Bingbot and Applebot execute JS?

Bingbot can render JavaScript, and Apple states that Applebot may render pages in a browser. Both are less documented than Googlebot, so when and how fully they render is harder to predict.

Neither should be trusted with content that appears only after complex client-side rendering. This makes static HTML for AI bots even more important if you want cross-engine visibility.

Can GPTBot, ClaudeBot, and PerplexityBot render JS?

No, AI-focused crawlers generally do not render JavaScript. GPTBot, ClaudeBot, and PerplexityBot primarily fetch raw HTML.

They do not execute scripts, build DOMs, or wait for hydration. If content is missing from initial HTML, these crawlers will not see it.
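If you want to see which of these crawlers actually request your JavaScript-heavy pages, a simple first step is to classify user-agent strings in your access logs. The Python sketch below does a case-insensitive substring match on each crawler's user-agent token. The token list is an assumption you should maintain yourself, and user agents can be spoofed, so confirm important hits against each operator's published IP ranges or reverse DNS.

```python
from collections import Counter

# Substrings that appear in each crawler's user-agent string (matched case-insensitively).
CRAWLER_TOKENS = {
    "GPTBot": "AI crawler (OpenAI)",
    "ClaudeBot": "AI crawler (Anthropic)",
    "PerplexityBot": "AI crawler (Perplexity)",
    "Googlebot": "search crawler (Google)",
    "bingbot": "search crawler (Bing)",
    "Applebot": "search crawler (Apple)",
}


def classify_user_agent(user_agent: str) -> str:
    """Return a label for the first known crawler token found, else 'other'."""
    ua = user_agent.lower()
    for token, label in CRAWLER_TOKENS.items():
        if token.lower() in ua:
            return label
    return "other"


# Hypothetical user-agent values pulled from an access log.
sample_agents = [
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 Chrome/124.0 Safari/537.36",
]

print(Counter(classify_user_agent(ua) for ua in sample_agents))
```

Pairing these labels with the URLs each bot requested shows whether AI crawlers are hitting routes whose content only exists after JavaScript runs.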

Why do most AI crawlers fetch only HTML?

Fetching HTML is fast, cheap, and scalable. JavaScript execution is slow and resource-heavy, making it impractical for AI ingestion.

How do crawler resource limits affect JS execution?

Rendering requires CPU, memory, and time. At scale, crawlers must limit execution, which is why HTML-first delivery remains critical.

Rendering vs Indexing: Commonly Confused Concepts

Rendering and indexing are separate processes, and confusing them causes major SEO and AI visibility mistakes.

Rendering means turning HTML, CSS, and JavaScript into visible content. Indexing means storing and organizing that content so it can appear in search results or be reused by AI systems. One does not guarantee the other.

This distinction matters more in 2026 because JavaScript SEO and LLMs depend on what is indexed, not what merely renders. A page can render perfectly for users and still fail to enter indexes or AI data pipelines.

For AI crawlers and modern search engines, rendering is optional but indexing is mandatory. Understanding the gap helps explain why “working pages” still lose rankings and AI visibility.

What is the difference between rendering and indexing?

Rendering shows content; indexing stores and evaluates it. Rendering happens when a system processes code to display content. Indexing happens when a search engine decides that content is valuable, accessible, and worth saving.

A page can be rendered but ignored. It can also be indexed without full rendering.

This is why LLM JavaScript rendering assumptions break down. AI systems depend on indexed outputs, not live rendering states.

Key insight:

If content is not indexed, it does not exist for search or AI reuse.

Can Google index content without rendering?

Yes, Google can index content without rendering JavaScript. Google often indexes raw HTML during the first crawl. If meaningful text exists in the initial response, it can rank without JavaScript execution.

Rendering may happen later, or not at all. This is why server-rendered and static content often outperforms JS-heavy pages, even when both look identical to users.

Why might rendered content still not be indexed?

Rendered content can be excluded due to quality, timing, or accessibility issues.

Delayed rendering, blocked resources, weak internal linking, or low perceived value can all prevent indexing.

Even if JavaScript runs, search engines may skip saving that content. For AI search visibility, this is critical: LLMs only see what makes it through indexing layers, not what temporarily renders.

How does delayed rendering impact SEO visibility?

Delayed rendering can cause content to be evaluated too late or ignored entirely. This often leads to partial indexing or missing sections.

Why don’t LLMs perform indexing themselves?

LLMs do not crawl or store the web. They rely on indexed, processed content provided by other systems.

Why JavaScript Content Often Fails for AI Visibility

JavaScript content often fails for AI visibility because most AI systems cannot execute client-side code.

AI crawlers and LLMs usually fetch raw HTML and stop there. If important text, links, or entities appear only after JavaScript runs, that content is effectively invisible to AI. This is one of the biggest causes of lost AI search visibility in modern sites.

In 2026, this problem is amplified by SPAs, hydration-heavy frameworks, and dynamic imports. These patterns work for users but break JavaScript SEO and LLMs. AI systems prefer immediate, stable content that exists without execution delays.

The result is simple but painful: pages look “fine” in browsers, yet AI tools miss key information entirely.

Why do AI systems miss client-side rendered content?

AI systems miss client-side rendered content because they do not wait for JavaScript execution.

Most AI crawlers fetch HTML, extract visible text, and move on. They do not trigger events, wait for API calls, or run hydration steps.

If your headings, body text, or internal links are injected after load, AI crawlers never capture them. This directly hurts AI visibility and downstream LLM usage.

Best practice:

- Put core content in the initial HTML

- Treat JavaScript as enhancement, not delivery

How do SPAs hide text from crawlers?

SPAs hide text by serving empty or minimal HTML shells. The real content loads only after JavaScript fetches data and updates the DOM.

For AI crawlers, this looks like a blank page. No text means nothing to index, summarize, or cite.

This is why many SPAs struggle with AI Overview indexing signals, even when rankings seem stable.
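A quick way to spot the empty-shell pattern is to measure how much visible text the server sends alongside its script tags. The Python heuristic below flags pages that load scripts but contain almost no text. The 200-character threshold is an arbitrary assumption, so tune it against pages you already know are healthy.

```python
from html.parser import HTMLParser


class ShellStats(HTMLParser):
    """Counts visible text characters and <script> tags in raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.text_chars = 0
        self.script_tags = 0
        self._skip = False  # True while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.script_tags += 1
        if tag in ("script", "style"):
            self._skip = True

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._skip = False

    def handle_data(self, data):
        if not self._skip:
            self.text_chars += len(data.strip())


def looks_like_empty_shell(html: str, min_text_chars: int = 200) -> bool:
    """Heuristic: scripts are present but visible text is below the threshold."""
    stats = ShellStats()
    stats.feed(html)
    stats.close()
    return stats.script_tags > 0 and stats.text_chars < min_text_chars


shell = (
    "<html><head><title>App</title></head><body>"
    '<div id="root"></div><script src="/main.js"></script></body></html>'
)
article = (
    "<html><body><h1>Guide</h1><p>" + "Server-rendered text. " * 20 + "</p>"
    '<script src="/main.js"></script></body></html>'
)

print(looks_like_empty_shell(shell))  # True
print(looks_like_empty_shell(article))  # False
```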

Why doesn’t hydration help AI crawlers?

Hydration does not add anything AI crawlers can read. It runs in the browser after the HTML arrives and attaches JavaScript behavior to markup the server already sent.

Crawlers that skip JavaScript see exactly what the server returned. Server-rendered markup is visible to them; content that appears only after hydration or client-side data fetching is not. That makes hydration irrelevant to LLM visibility, not a fix for it.

What happens with infinite scroll and JS tabs?

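Content hidden behind scroll events or tabs often never loads for crawlers. AI systems see only what the initial HTML contains: tab panels already in the markup (even if hidden with CSS) are still in the HTML, while panels fetched on click are not.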

Why do dynamic imports break content discovery?

Dynamic imports delay content loading until conditions are met. AI crawlers never trigger those conditions, so content stays hidden.

Structured Data & JavaScript: A Critical Blind Spot

Structured data injected via JavaScript is often invisible to AI crawlers and LLMs.

Many sites rely on client-side JSON-LD to add schema after page load. While this may work for users and sometimes for search engines, it frequently fails for AI crawlers. If schema is not present in the initial HTML, most AI systems never see it.

This blind spot directly impacts AI citations, summaries, and AI Overview inclusion. LLMs depend on clean, pre-rendered signals to understand entities, relationships, and facts. When schema is delayed or injected dynamically, those signals disappear.

In 2026, JavaScript SEO and LLMs demand server-visible structured data, not best-effort client-side delivery.

Can AI read JSON-LD injected via JavaScript?

No, AI systems usually cannot read JSON-LD injected after JavaScript execution. AI crawlers typically fetch raw HTML and extract visible text and markup immediately. They do not wait for scripts to run or schema to be injected.

If JSON-LD is added after load, it misses AI ingestion pipelines completely.

Best practice:

- Place critical schema directly in the initial HTML

- Avoid relying on client-side injection for entity markup
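One way to follow this is to serialize schema on the server and write it straight into the HTML template. The Python sketch below turns a dictionary into a JSON-LD script tag with the standard json module. Escaping "<" as \u003c is a common precaution so a value containing "</script>" cannot close the tag early; the function name and the sample Article data are illustrative.

```python
import json


def jsonld_script_tag(data: dict) -> str:
    """Serialize schema.org data into a JSON-LD <script> tag for server-rendered HTML."""
    # Escape "<" so a value such as "</script>" cannot end the tag early.
    # JSON parsers decode the \u003c escape back to "<".
    payload = json.dumps(data, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'


article_schema = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Can AI and LLMs Render JavaScript?",
}
print(jsonld_script_tag(article_schema))
# <script type="application/ld+json">{"@context": "https://schema.org", "@type": "Article", "headline": "Can AI and LLMs Render JavaScript?"}</script>
```

Because the tag is part of the response body, it is present on the very first fetch, with no script needed to add it.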

Why does client-side schema often go unseen?

Client-side schema goes unseen because execution never happens.

Most AI crawlers do not execute JavaScript, build DOMs, or monitor script changes.

This means schema that looks “present” in DevTools may not exist from an AI crawler’s perspective. This is one of the most common AI crawler JS support failures on modern sites.
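To check schema from a non-rendering crawler's point of view, parse the raw HTML and collect only the JSON-LD blocks that are already there. In this Python sketch, schema that a script would inject at runtime is not found, because nothing is executed. The sample pages are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collects parsed JSON-LD objects from <script type="application/ld+json"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self._buffer: list[str] = []
        self.items: list = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed JSON-LD is skipped in this sketch

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)


def jsonld_in_raw_html(html: str) -> list:
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    return collector.items


static_page = '<head><script type="application/ld+json">{"@type": "Organization", "name": "Example"}</script></head>'
injected_page = (
    '<head><script>const s = document.createElement("script"); '
    's.type = "application/ld+json"; '
    's.text = JSON.stringify({"@type": "Organization"}); '
    "document.head.appendChild(s);</script></head>"
)

print(jsonld_in_raw_html(static_page))  # [{'@type': 'Organization', 'name': 'Example'}]
print(jsonld_in_raw_html(injected_page))  # []
```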

How does schema affect AI citations and summaries?

Schema helps AI systems identify entities, attributes, and relationships. When available, it improves accuracy in summaries, fact extraction, and source attribution.

Without visible schema, AI relies only on plain text, which weakens citation confidence and reduces reuse chances.

For AI systems that do not render JavaScript, schema must be in the static HTML to matter.

When does Google see JS-generated schema?

Google can process JSON-LD generated with JavaScript when rendering succeeds and the required resources are not blocked. However, it depends on the rendering queue, so it can be delayed.

Why do AI crawlers usually miss JavaScript-injected schema?

AI crawlers prioritize speed and scale. Executing JavaScript just to find schema is inefficient, so they skip it entirely.

Real SEO Impact of JS Rendering Limitations

JavaScript rendering limitations directly hurt crawling, indexing, and AI visibility, even when pages look perfect to users. Modern sites often “work” in browsers but fail for crawlers and AI systems because critical content depends on client-side execution. When bots cannot reliably access text, links, or schema, rankings weaken and AI reuse drops.

In 2026, this gap is more damaging because of AI crawlers’ JavaScript limits and the rise of AI Overviews. JavaScript SEO and LLMs favor content that appears instantly in raw HTML. If discovery is delayed or incomplete, pages lose trust, coverage, and citations.

The real impact shows up as slower indexing, unstable rankings, and missing AI summaries despite no visible UX issues.

How does JavaScript affect crawling and indexing?

JavaScript affects crawling and indexing by delaying or hiding content from bots. Crawlers first fetch HTML. If meaningful content isn’t present, they may index little or nothing before moving on.

Rendering queues, blocked resources, or script errors further reduce coverage. This limits what AI crawlers capture and what LLMs can use downstream.

Best practice:

- Ensure primary content exists in the initial response

- Use JS to enhance, not reveal, core text

Why do rankings drop despite “working pages”?

Rankings drop because crawlers evaluate what they can access, not what users see. If content appears only after JavaScript runs, it may be missed or partially indexed.

This creates thin signals, weak relevance, and lower trust, especially for competitive queries and AI Overviews.

How does JS delay content discovery?

JavaScript delays discovery by requiring execution, timing, and resources. Delayed APIs, hydration, and lazy loading push content beyond crawler attention windows.

Early discovery matters for freshness, internal linking signals, and AI extraction pipelines.

Why must important text exist in the initial HTML?

Initial HTML guarantees immediate visibility to crawlers and AI systems. If it’s not there, discovery is uncertain.

Why does lazy loading hurt AI comprehension?

Lazy loading hides text and images until events fire. AI crawlers rarely trigger those events, so content stays invisible.

AEO Impact: How AI Overviews Consume Content

AI Overviews consume content by extracting clear, static signals from pages, not by executing JavaScript. AI Overviews summarize answers using content that is easy to fetch, parse, and trust. That means visible text, headings, lists, and semantic HTML available at first load. Client-side rendering introduces delays and uncertainty, which reduces selection for summaries.

This matters for JavaScript SEO and LLMs because AEO depends on what AI can confidently read at scale. Pages that rely on hydration, tabs, or lazy-loaded text often lose out even if rankings look fine. In 2026, HTML-first delivery is the fastest path to consistent AI Overview inclusion and citations.

How do AI Overviews extract website information?

AI Overviews extract information from indexed, pre-rendered content. They pull concise facts, definitions, steps, and entities from pages that expose meaning immediately.

AI systems favor pages where:

- Headings describe intent clearly

- Paragraphs answer questions directly

- Lists summarize steps or comparisons

If content requires JS execution to appear, it’s often skipped. This is why AI crawler JS support limitations directly affect AEO outcomes.

Why does semantic HTML matter for LLMs?

Semantic HTML helps LLMs understand meaning without guessing. Tags like <h1> to <h3>, <p>, <ul>, and <article> signal structure and importance.

LLMs use these cues to extract answers accurately. Div-heavy layouts with JS-injected text reduce clarity and confidence.

Best practice:

- Use proper headings and lists

- Keep primary content readable without scripts

Why does visible text beat hidden JS content?

Visible text wins because it is reliable and immediate. Hidden or delayed JS content may never be seen by AI pipelines.

For LLM JavaScript rendering, certainty beats sophistication. Clear text beats clever apps.

How are entities derived from static content?

Entities are extracted from names, attributes, and relationships in visible text. Static HTML makes entity recognition accurate and repeatable.

Why does AI prefer clean content blocks?

Clean blocks reduce noise and ambiguity. They make summarization faster, safer, and more trustworthy.

Solutions: How to Make JavaScript Sites AI-Friendly

JavaScript sites become AI-friendly when critical content is available without client-side execution. The goal is simple: ensure AI crawlers and LLMs can access meaningful text, links, and schema directly from HTML. This removes the uncertainty caused by AI crawlers’ JavaScript limitations and improves indexing, rankings, and AI Overview inclusion.

In 2026, JavaScript SEO and LLMs reward predictability. Techniques like SSR, SSG, and prerendering shift content generation from the browser to the server or build process. This guarantees that both users and AI systems see the same core content immediately.

Choosing the right approach depends on how dynamic your site is, how often content changes, and how important AI search visibility is to your growth.

What is server-side rendering (SSR)?

Server-side rendering means generating full HTML on the server for every request. Instead of waiting for JavaScript to run in the browser, the server sends a complete page with content already visible.

This works well for dynamic pages where data changes frequently. Because the content is already in the HTML response a crawler receives, SSR removes the dependence on JavaScript rendering.

Benefits:

- Immediate AI visibility

- Faster indexing

- Strong AEO performance
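Here is a minimal, framework-free sketch of SSR using only Python's standard library http.server: every request is answered with complete HTML, so the product details are in the first response. The PRODUCTS dictionary stands in for a real database or API, and all names are hypothetical. Production sites would normally use a framework's SSR mode instead.

```python
from html import escape
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical data source; a real app would query a database or API per request.
PRODUCTS = {"/widgets": {"name": "Widget", "price": "19.00", "stock": 42}}


def render_product_page(product: dict) -> str:
    """Build complete HTML on the server, so the content is in the first response."""
    return (
        "<!doctype html><html><head>"
        f"<title>{escape(product['name'])}</title></head><body>"
        f"<h1>{escape(product['name'])}</h1>"
        f"<p>Price: ${escape(product['price'])}. In stock: {product['stock']}.</p>"
        # Client-side JavaScript can still enhance the page after load.
        '<script src="/enhance.js" defer></script>'
        "</body></html>"
    )


class SSRHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        product = PRODUCTS.get(self.path)
        if product is None:
            self.send_error(404)
            return
        body = render_product_page(product).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, call HTTPServer(("127.0.0.1", 8000), SSRHandler).serve_forever() and request /widgets; the heading and price arrive in the raw response before any script runs.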

What is static site generation (SSG)?

Static site generation builds HTML pages at build time. Content is generated once and served instantly to users and crawlers.

SSG is ideal for blogs, documentation, and landing pages. It produces clean, lightweight HTML that AI crawlers consistently understand.

For serving static HTML to AI bots, SSG is often the safest and fastest option.
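As a sketch of the build-time idea, this Python script writes one static HTML file per post. The POSTS list is a hypothetical stand-in for Markdown files or a CMS export, and the slug/index.html layout is a common convention rather than a requirement. The example builds into a temporary directory so it is safe to run.

```python
import tempfile
from html import escape
from pathlib import Path

# Hypothetical content source; a real build might read Markdown files or a CMS export.
POSTS = [
    {"slug": "render-js", "title": "Can AI Render JavaScript?", "body": "Most AI crawlers read raw HTML."},
    {"slug": "ssr-vs-ssg", "title": "SSR vs SSG", "body": "Both put content in the initial HTML."},
]


def build_site(posts: list[dict], out_dir: Path) -> list[Path]:
    """Write one static HTML file per post at build time (SSG)."""
    written = []
    for post in posts:
        page_dir = out_dir / post["slug"]
        page_dir.mkdir(parents=True, exist_ok=True)
        html = (
            "<!doctype html><html><head>"
            f"<title>{escape(post['title'])}</title></head><body><article>"
            f"<h1>{escape(post['title'])}</h1><p>{escape(post['body'])}</p>"
            "</article></body></html>"
        )
        target = page_dir / "index.html"
        target.write_text(html, encoding="utf-8")
        written.append(target)
    return written


# Example build into a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    built = build_site(POSTS, Path(tmp))
    first_page = built[0].read_text(encoding="utf-8")

print(len(built), "pages built")
print(first_page)
```

Every file is finished HTML, so any crawler that fetches it gets the full article without executing anything.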

What is prerendering and dynamic rendering?

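Prerendering creates static HTML snapshots of pages for crawlers. Dynamic rendering serves different versions based on the requester, typically a snapshot for bots and the client-side app for users.

These methods help when full SSR is not possible, especially for SPAs. Google now describes dynamic rendering as a workaround rather than a long-term solution, so treat it as a bridge to SSR or SSG.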

They reduce AI crawler JS support risks by exposing readable content without rewriting the entire app.
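A minimal Python sketch of the dynamic-rendering decision: known bots get a prerendered snapshot, and everyone else gets the client-side app shell. The bot token list, the PRERENDERED dictionary, and the sample markup are assumptions. Keep each snapshot's content equivalent to what users see, since serving bots materially different content is cloaking.

```python
# Hypothetical prerendered snapshots, e.g. produced by a headless browser at deploy time.
PRERENDERED = {
    "/pricing": "<html><body><h1>Pricing</h1><p>Plans start at $10.</p></body></html>",
}
APP_SHELL = '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>'

# Assumed user-agent tokens to treat as bots (matched case-insensitively).
BOT_TOKENS = ("googlebot", "bingbot", "applebot", "gptbot", "claudebot", "perplexitybot")


def is_known_bot(user_agent: str) -> bool:
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def select_html(path: str, user_agent: str) -> str:
    """Serve a snapshot to bots when one exists; otherwise fall back to the app shell."""
    if is_known_bot(user_agent) and path in PRERENDERED:
        return PRERENDERED[path]
    return APP_SHELL


bot_ua = "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)"
print(select_html("/pricing", bot_ua) == PRERENDERED["/pricing"])  # True
```

Routes without a snapshot fall back to the shell, which is exactly where this approach leaves gaps that SSR or SSG would close.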

When should SSR be used?

Use SSR for frequently updated, data-driven pages where freshness matters. It balances flexibility and AI visibility.

When is prerendering the best solution?

Prerendering works best for large SPAs with stable content. It’s a practical fix when rebuilding for SSR or SSG isn’t feasible.

Framework-Specific Best Practices

Modern frameworks can be AI-friendly, but only when their rendering modes are configured correctly.

Next.js, Nuxt, and React all support patterns that solve AI crawlers’ JavaScript limitations. The problem is not the framework; it’s the default setup. Many teams ship client-heavy builds that hide content from AI systems.

For JavaScript SEO and LLMs, frameworks must output meaningful HTML before hydration. This ensures stable indexing, stronger rankings, and better AI Overview visibility. In 2026, choosing the right rendering mode matters more than the framework itself.

How does Next.js handle JavaScript rendering?

Next.js supports multiple rendering strategies that work well for AI visibility.

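It supports Server-Side Rendering (SSR), Static Site Generation (SSG), and Incremental Static Regeneration (ISR).

In the Pages Router, getStaticProps and getServerSideProps put data into the initial HTML; in the App Router, Server Components do the same. This removes the JavaScript rendering risk for that content.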

Best practices:

- Prefer SSG for content pages

- Use SSR for frequently updated data

- Avoid client-only pages for SEO-critical routes

How does Nuxt improve AI crawlability?

Nuxt improves crawlability through built-in SSR and static generation. It server-renders pages by default, so routes output full HTML unless SSR is disabled.

This makes Nuxt a strong choice for serving static HTML to AI bots, especially on content-heavy sites.

Tip:

Disable unnecessary client-only plugins on SEO pages.

How should React SPAs be optimized?

React SPAs must avoid client-only rendering for important content. Pure SPAs often serve empty HTML shells.

To fix this:

- Add SSR with frameworks like Next.js

- Use prerendering for key routes

- Ensure headings and text exist before hydration

This directly improves visibility to AI crawlers with limited JS support.

Which rendering mode is best for SEO & AEO?

SSG is best for stable content. SSR is best for dynamic pages. Client-only rendering should be avoided for SEO-critical content.

How to test rendered vs raw HTML?

Use “View Source” to check raw HTML. If content isn’t there, AI likely can’t see it.

Testing Whether AI Can See Your Content

You can only confirm AI visibility by testing what exists in raw HTML before JavaScript runs. Many site owners assume content is visible because it appears in the browser. That assumption is where JavaScript SEO for LLMs goes wrong: AI crawlers and LLM pipelines usually read the initial HTML response, not the hydrated page.

In 2026, testing visibility means separating what users see from what bots actually receive. If critical text, links, or schema are missing from raw HTML, AI systems will likely miss them. Proper testing prevents indexing gaps, ranking drops, and missing AI Overview citations.

This section shows how to verify AI crawler JS support using simple, reliable checks.

How to check raw HTML output?

Check raw HTML by viewing the page source, not the rendered DOM. Right-click the page and choose “View Page Source.” This shows the HTML the server returns before any JavaScript runs, which is what non-rendering crawlers receive on first fetch.

Look for:

- Main headings and body text

- Internal links

- Structured data

If content is missing here, AI crawlers that do not run JavaScript will miss it, however good the UI looks.

Rule:

If it’s not in raw HTML, assume AI cannot see it.
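You can script the same check. This Python sketch fetches a URL with urllib (no JavaScript runs) and reports which expected phrases are missing from the response. The user-agent string and phrases are placeholders, and some servers vary responses by user agent. A plain substring match also counts text inside inline scripts or hydration JSON, so a hit is not proof the text is visible markup.

```python
from urllib.request import Request, urlopen


def fetch_raw_html(url: str, user_agent: str = "raw-html-check/0.1", timeout: float = 10.0) -> str:
    """Fetch the server response as-is; nothing in it is executed."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def missing_phrases(raw_html: str, phrases: list[str]) -> list[str]:
    """Return the phrases that do not appear anywhere in the raw HTML (case-insensitive)."""
    lowered = raw_html.lower()
    return [p for p in phrases if p.lower() not in lowered]


# Usage (needs network access; the URL and phrases are placeholders):
# html = fetch_raw_html("https://example.com/pricing")
# print(missing_phrases(html, ["Pricing", "Plans start at"]))
```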

How to test Google rendering properly?

Use Google’s rendering tools to compare raw vs rendered output. Google Search Console offers URL Inspection, which shows rendered HTML and indexing status.

Compare:

- Raw HTML

- Rendered HTML

- Indexed content

If Google renders content late or partially, AI visibility is still at risk even if rankings appear stable.
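To make the raw-versus-rendered comparison concrete, this Python sketch lists text that appears only in the rendered HTML, which is the content that depends on JavaScript. It assumes you already have both versions as strings, for example raw HTML from View Source and rendered HTML copied from URL Inspection or saved from a headless browser; the sample markup is hypothetical.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collects whitespace-normalized text nodes, ignoring script and style."""

    def __init__(self) -> None:
        super().__init__()
        self._skip = 0
        self.texts: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        text = " ".join(data.split())
        if text and not self._skip:
            self.texts.add(text)


def text_nodes(html: str) -> set[str]:
    collector = TextCollector()
    collector.feed(html)
    collector.close()
    return collector.texts


def js_only_text(raw_html: str, rendered_html: str) -> list[str]:
    """Text present after rendering but absent from the raw server response."""
    return sorted(text_nodes(rendered_html) - text_nodes(raw_html))


raw = '<div id="root"><p>Loading</p></div><script src="/app.js"></script>'
rendered = (
    '<div id="root"><h1>Pricing</h1><p>Plans start at $10.</p></div>'
    '<script src="/app.js"></script>'
)
print(js_only_text(raw, rendered))  # ['Plans start at $10.', 'Pricing']
```

Anything this function returns is content a non-rendering AI crawler would not see.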

How to simulate AI crawler access?

Simulate AI crawlers by disabling JavaScript and testing server responses. Tools and browser settings can load pages without JS. This mimics how many AI crawlers fetch content and reveals hidden dependencies.

Best practice:

Your core content should remain readable with JavaScript fully disabled.

What does “View Source” reveal vs DevTools?

View Source shows initial HTML. DevTools shows the DOM after JavaScript runs. AI systems care about the first, not the second.

Which tools expose JS visibility issues?

Rendering testers, crawl simulators, and HTML fetch tools quickly expose gaps. They reveal where JavaScript blocks AI search visibility.

Common Myths About LLMs and JavaScript

Many assumptions about LLMs and JavaScript are incorrect and lead to serious visibility mistakes.

A common belief is that AI can “figure out” JavaScript or behave like a browser. In reality, LLM JavaScript rendering is extremely limited. These myths cause teams to overtrust client-side code and underdeliver content to AI systems.

In 2026, JavaScript SEO and LLMs require precision, not hope. AI systems reward content that is explicit, visible, and static. Clearing up these myths helps prevent lost indexing, missing AI Overviews, and weak citations.

Can AI “understand” JavaScript code itself?

AI can read JavaScript as text but cannot execute or validate its output. LLMs recognize syntax and logic patterns, but they do not run the code or see its results.

Understanding code is not the same as seeing rendered content. If JavaScript generates text at runtime, AI will not access it.

Key takeaway:

Readable code ≠ visible content.

Do LLM plugins or browsing tools render JS?

No, LLM browsing tools do not reliably render JavaScript. Even when tools fetch live pages, they prioritize speed and safety over full execution. They often extract raw HTML or simplified text, skipping hydration and dynamic updates.

This means AI crawler JS support remains limited, even with “browsing” features enabled.

Does AI training include live website rendering?

No, AI training does not involve live rendering of websites. Training data is collected, processed, and stored ahead of time. It does not involve executing JavaScript per site or preserving runtime behavior.

This is why assuming a model already “knows” your JavaScript-rendered content is an unreliable AI search visibility strategy.

Why training data ≠ real-time crawling?

Training uses snapshots, not live page execution. It reflects past content, not current JS output.

Why execution is different from prediction?

Execution runs code. Prediction generates text. LLMs only do the latter.

Future Outlook: Will AI Ever Render JavaScript?

AI is unlikely to fully render JavaScript at scale, even in the future.

While AI systems will improve at extracting and understanding content, LLM JavaScript rendering will remain limited by cost, speed, and scale. Executing JavaScript like a browser for billions of pages is expensive and slow, which conflicts with how AI systems operate efficiently.

In 2026 and beyond, AI crawlers will continue to handle JavaScript selectively. Some systems may use limited rendering for high-value pages, but most AI pipelines will still depend on pre-rendered, static content. This makes HTML-first SEO a long-term strategy, not a temporary workaround.

Will LLMs become browser-based?

No, LLMs are not expected to become full browser-based systems. LLMs are designed for language prediction, not page rendering. Turning them into browsers would add massive overhead without improving answer quality.

Instead, LLMs will rely on external systems to fetch and preprocess content. This keeps page rendering separate from the language model by design.

Will headless browsers integrate with AI?

Limited integration is possible, but not at full web scale. Some AI tools may use headless browsers for selective tasks, audits, or trusted sources.

However, running headless browsers for continuous crawling is too resource-heavy. This limits AI crawler JS support to edge cases only.

How might AI crawling evolve?

AI crawling will evolve toward smarter filtering, not deeper execution.

Future systems will prioritize:

- Cleaner HTML

- Strong semantic structure

- Trusted domains

This reduces the need for JavaScript execution while improving extraction accuracy.

Why cost and scale limit JS rendering?

JavaScript execution requires CPU, memory, and time. At internet scale, this becomes unsustainable.

Why HTML-first will remain dominant?

HTML is fast, predictable, and universally readable. That reliability makes it the preferred format for AI systems.

Final Best Practices Checklist

Websites succeed in AI search when critical content is accessible without JavaScript execution.

This checklist summarizes what must be visible, predictable, and crawlable when AI crawlers meet JavaScript-heavy pages. In 2026, JavaScript SEO and LLMs reward sites that remove uncertainty and expose meaning immediately.

Think of this as a safety net. Even if browsers render everything perfectly, AI systems only trust what they can access quickly and consistently. Following these best practices protects rankings, indexing, and AI Overview inclusion regardless of crawler limitations.

What content must be server-rendered?

Core content must always be server-rendered or statically generated. This includes headings, body text, internal links, and primary entities.

If this content appears only after client-side execution, AI crawlers that do not run JavaScript will never see it.

Server-render these elements:

- Page titles and H1–H3 headings

- Main paragraphs and lists

- Navigation and contextual links
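As a rough illustration of what server-rendered output means here, the Python sketch below builds the complete HTML for a page, including the title, headings, paragraphs, and plain `<a href>` links, before anything reaches the client. The `page` dictionary shape and the `render_page` name are hypothetical; in practice your framework's SSR or templating layer plays this role.

```python
from html import escape


def render_page(page: dict) -> str:
    """Build complete HTML on the server so no client-side JS is needed
    for the title, headings, body text, or links.

    `page` is a hypothetical content dict:
      {"title": str, "h1": str,
       "sections": [{"h2": str, "paragraphs": [str, ...]}, ...],
       "links": [(href, anchor_text), ...]}
    """
    parts = [
        "<!doctype html>",
        '<html lang="en">',
        f'<head><meta charset="utf-8"><title>{escape(page["title"])}</title></head>',
        "<body>",
        "<nav>",
    ]
    # Plain <a href> links are crawlable without running any script.
    for href, text in page.get("links", []):
        parts.append(f'<a href="{escape(href)}">{escape(text)}</a>')
    parts.append("</nav>")
    parts.append(f'<main><h1>{escape(page["h1"])}</h1>')
    for section in page.get("sections", []):
        parts.append(f'<h2>{escape(section["h2"])}</h2>')
        for para in section.get("paragraphs", []):
            parts.append(f"<p>{escape(para)}</p>")
    parts.append("</main>")
    parts.append("</body></html>")
    return "\n".join(parts)
```

Whatever request handler or framework you use would return this string as the response body, so a crawler receives the same text a user sees, with no script required.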

Which elements should never rely on JS?

Important SEO elements should never depend on JavaScript alone. Client-side delivery introduces delays and visibility risk.

Avoid JS-only rendering for:

- Critical text

- Structured data

- Internal linking

These elements directly affect AI crawler JS support and indexing confidence.
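For structured data, one option is to serialize it into the server-rendered HTML as a JSON-LD `<script type="application/ld+json">` block instead of injecting it client-side. The sketch below is a minimal Python example; the helper name is hypothetical, and the schema.org `Article` values in the usage check are placeholders.

```python
import json


def json_ld_script(data: dict) -> str:
    """Serialize schema.org data into a JSON-LD <script> tag that is part of
    the server-rendered HTML, rather than injecting it with JavaScript.
    """
    payload = json.dumps(data, ensure_ascii=False)
    # Escape "</" so a value containing "</script>" cannot close the tag early.
    # "\/" is a valid JSON escape for "/", so the payload stays valid JSON.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```

A call such as `json_ld_script({"@context": "https://schema.org", "@type": "Article", "headline": "..."})` would be placed in the page `<head>` by the same template that renders the body text.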

How to prepare websites for AI search in 2026?

Prepare for AI search by prioritizing HTML-first delivery and semantic structure. Design pages for crawlers first, then enhance them for users. This approach aligns with how AI systems extract, trust, and reuse content.

AI-friendly technical SEO checklist

- Content visible in raw HTML

- Semantic headings and lists

- Static or server-rendered schema

- Crawlable internal links
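The checklist above can be turned into a rough automated audit of the raw HTML. This Python sketch counts headings, lists, crawlable links, JSON-LD blocks, and words outside scripts and styles. The function names are hypothetical, and treating fragment-only (`#...`) and `javascript:` links as non-crawlable is an assumption to adjust for your own site.

```python
from html.parser import HTMLParser

_HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
_NON_TEXT = {"script", "style", "template"}


class _AuditParser(HTMLParser):
    """Collects simple, crawler-relevant signals from raw HTML."""

    def __init__(self):
        super().__init__()
        self.headings = {}
        self.lists = 0
        self.crawlable_links = 0
        self.json_ld_blocks = 0
        self.words = 0
        self._non_text_depth = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in _NON_TEXT:
            self._non_text_depth += 1
        if tag in _HEADINGS:
            self.headings[tag] = self.headings.get(tag, 0) + 1
        elif tag in ("ul", "ol"):
            self.lists += 1
        elif tag == "a":
            href = (attrs.get("href") or "").strip()
            # Assumption: fragment-only and javascript: links are not crawlable.
            if href and not href.startswith("#") and not href.lower().startswith("javascript:"):
                self.crawlable_links += 1
        elif tag == "script" and (attrs.get("type") or "").lower() == "application/ld+json":
            self.json_ld_blocks += 1

    def handle_endtag(self, tag):
        if tag in _NON_TEXT and self._non_text_depth > 0:
            self._non_text_depth -= 1

    def handle_data(self, data):
        # Count only words a non-rendering crawler can read as page text.
        if self._non_text_depth == 0:
            self.words += len(data.split())


def audit_raw_html(raw_html: str) -> dict:
    parser = _AuditParser()
    parser.feed(raw_html)
    parser.close()
    return {
        "has_h1": parser.headings.get("h1", 0) > 0,
        "headings": parser.headings,
        "lists": parser.lists,
        "crawlable_links": parser.crawlable_links,
        "has_json_ld": parser.json_ld_blocks > 0,
        "word_count": parser.words,
    }
```

A typical client-side-only shell such as `<div id="root"></div><script src="/app.js"></script>` scores zero on every signal, which is exactly the pattern the checklist warns against.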

JavaScript SEO do’s and don’ts

Do: use JS for interaction and UX

Don’t: use JS to reveal core meaning

Key Takeaways

AI visibility improves when teams accept that JavaScript execution is not guaranteed for AI systems.

This final section distills what matters most for LLM JavaScript rendering, AI crawlers, and long-term AI search visibility. In 2026, success is not about clever frameworks; it’s about predictable delivery.

AI systems reward content that is fast to fetch, easy to parse, and immediately meaningful. Pages that depend on client-side logic introduce uncertainty, delays, and missed signals. An HTML-first mindset removes that risk and aligns SEO, AEO, and AI Overview performance under one strategy.

Can AI & LLMs render JavaScript?

No. Most AI systems and LLMs do not render JavaScript the way browsers do. They do not execute client-side code, wait for hydration, or build live DOMs. They consume pre-rendered, indexed text.

If content is missing from initial HTML, AI systems will likely miss it. This is the core limitation behind JavaScript SEO and LLMs.

What should SEOs and developers do now?

Shift critical content delivery to the server or the build step. SEOs should audit raw HTML, and developers should choose SSR, SSG, or prerendering for important pages.

This alignment removes guesswork and stabilizes AI search visibility.

Why HTML-first SEO wins in AI search?

HTML-first SEO wins because it is reliable, scalable, and AI-readable. Static, semantic content is easier to index, summarize, and cite.

That reliability is why HTML-first will continue to dominate AI search.

Can AI crawlers render JavaScript like a browser?

Most AI crawlers, including OpenAI’s crawlers (such as GPTBot) and similar LLM-based crawlers, do not execute or render JavaScript. They fetch the static HTML the server returns but do not run client-side scripts to generate dynamic content. Any content loaded only after JavaScript runs may therefore be invisible to these crawlers.

Why can’t most LLMs render JavaScript content?

LLMs are designed for language prediction, not browser-style execution. Unlike full browsers, which run JavaScript to build the final DOM, most AI systems do not emulate that environment, so dynamically injected text or UI elements often remain invisible.

Which crawlers can render JavaScript?

Google’s crawler infrastructure can render JavaScript: Googlebot uses a headless, Chromium-based browser, and its index also supports Google AI features such as AI Overviews. Rendering may still be deferred or partial. However, most other LLM-based crawlers, including those from OpenAI, Anthropic, and Perplexity, do not execute JavaScript and see only the initial HTML.

What content do AI/LLM crawlers see if they can’t run JavaScript?

AI crawlers that don’t render JavaScript mostly see the raw HTML response returned by the server. Content that appears only after scripts run, such as product details, dynamic text, or interactive elements, may not be visible to them, which can affect discovery and citation in AI-generated answers.

How does lack of JavaScript rendering affect AI visibility?

Because most AI crawlers don’t execute JavaScript, dynamically generated content may not be indexed or used when generating answers. This can lead to reduced visibility in AI search results, summaries, and answer models unless the content is rendered server-side or pre-rendered in the HTML.

Can server-side rendering or pre-rendering help AI bots see JavaScript content?

Yes. Techniques like server-side rendering (SSR), static site generation (SSG), and prerendering ensure that content appears in the HTML the server delivers. This makes important page content visible to AI crawlers that cannot run JavaScript, improving discoverability and potential citation.
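To make the build-step option concrete, the Python sketch below prerenders each route to a static `index.html` file, the basic idea behind SSG. The route-to-content mapping and the function names are hypothetical; real static site generators add templates, assets, and incremental builds on top of this.

```python
from html import escape
from pathlib import Path


def _output_path(route: str) -> str:
    # "/pricing/" -> "pricing/index.html"; "/" -> "index.html"
    parts = [part for part in route.split("/") if part]
    return "/".join(parts + ["index.html"])


def prerender(pages: dict[str, dict]) -> dict[str, str]:
    """Render every route to finished HTML at build time.

    `pages` maps a URL path to hypothetical content:
      {"title": str, "h1": str, "body": [str, ...]}
    Returns {relative file path: HTML}.
    """
    files = {}
    for route, page in pages.items():
        body = "\n".join(f"<p>{escape(p)}</p>" for p in page["body"])
        files[_output_path(route)] = (
            "<!doctype html>\n"
            '<html lang="en"><head><meta charset="utf-8">'
            f'<title>{escape(page["title"])}</title></head>\n'
            f'<body><main><h1>{escape(page["h1"])}</h1>\n{body}\n</main></body></html>\n'
        )
    return files


def write_site(files: dict[str, str], out_dir: str) -> None:
    """Write prerendered files so any static host can serve them as-is."""
    root = Path(out_dir)
    for rel_path, html in files.items():
        target = root / rel_path
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(html, encoding="utf-8")
```

Because the HTML is finished before deployment, every crawler receives the full text on the first request, whether or not it executes JavaScript.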