In 2026, managing multi-site SEO portfolios is no longer a human-scale problem; it is an algorithmic one. Enterprises operating dozens or hundreds of domains, whether through acquisitions, regional expansion, or franchise models, face a fragmented landscape where manual oversight is impractical. The complexity of modern search means a technical error on one subdomain can trigger a cascading loss of authority across your entire network.

To survive, enterprises must pivot to AI-driven centralization. This article, part of our comprehensive guide on Scaling Content Production with AI Automation, explores how to leverage “Mission Control” technology to govern global standards while allowing for local nuance. By automating technical audits and preventing keyword cannibalization at scale, AI turns a chaotic portfolio of websites into a synchronized revenue engine.

The Complexity of Multi-Site SEO for Enterprises

Managing SEO for a single site is difficult; managing it for a portfolio of 50+ sites multiplies the complexity. The sheer volume of data points (millions of keywords, backlinks, and technical elements) overwhelms traditional teams. Without AI, maintaining a unified strategy across disparate brands and regions becomes a logistical nightmare, often resulting in diluted domain authority and wasted budget.

Why is managing SEO across multiple sites challenging for enterprises?

Managing multiple sites is challenging because it fractures visibility and resource allocation. Enterprise SEO teams struggle to maintain technical standards across legacy platforms, different CMS architectures, and varying levels of local team expertise. This fragmentation leads to “SEO drift,” where individual sites diverge from best practices, creating vulnerabilities that competitors exploit.

Furthermore, the administrative burden of logging into fifty different Google Search Console properties or analytics accounts creates data blindness. Executives lack a unified view of performance, making it impossible to spot systemic issues like a global drop in Core Web Vitals or a widespread indexing error. In 2026, the speed of search updates requires instant, portfolio-wide reaction times that manual coordination cannot achieve. Without a centralized AI layer to aggregate and analyze this data, enterprises are constantly reactive, putting out fires rather than driving strategic growth.

How do multiple domains, languages, and regions increase SEO complexity?

Global portfolios introduce the “Localization Paradox”: content must be consistent with the brand but unique to the culture. Managing Hreflang Tags across 20 languages and 50 regions is combinatorially complex, because every regional version of a page must reference every other version and itself. A single misconfiguration can cause Google to ignore the annotations and serve the wrong language or regional version to users, destroying local conversion rates.

Beyond technical tags, search intent varies by region. A keyword that is transactional in the US might be informational in Germany. Managing these nuances manually requires an army of local SEOs. With multiple domains, you also face the challenge of “Domain Reputation.” If one acquired site has a toxic link profile, it can bleed credibility to other linked properties. AI is essential for monitoring these cross-border relationships and ensuring that the global mesh of sites supports, rather than sabotages, the collective authority.

What are the risks of inconsistent SEO practices across sites?

Inconsistency dilutes brand authority and confuses search algorithms. If one regional site uses high-quality, E-E-A-T optimized content while another uses thin, spun text, Google may flag the entire brand entity as untrustworthy. Inconsistent schema markup or site architecture also prevents the brand from dominating Rich Snippets globally.

The risk extends to user experience (UX). If a user navigates from your UK site to your French site and encounters a completely different structure or slower load times, trust erodes. In 2026, Google measures brand signals holistically. Inconsistent practices signal operational weakness. Moreover, without standardized reporting, it is impossible to benchmark performance. You cannot know if the Asian market is underperforming because of market conditions or because the local team is ignoring SEO protocols. Consistency is the foundation of scalable growth.

The Danger of Internal Competition and Keyword Cannibalization Across Domains

Keyword Cannibalization occurs when multiple sites in your portfolio compete for the same search terms, splitting link equity and confusing Google. This self-sabotage often results in none of your sites ranking #1, as they effectively knock each other out of the top spots, allowing a competitor to take the lead.

For enterprises with overlapping product lines or distinct brands targeting similar demographics, this is a critical threat. Without centralized AI oversight, Brand A might launch a campaign targeting “Enterprise Cloud Storage” without realizing Brand B is already ranking for it. AI tools can map the entire portfolio’s keyword footprint, identifying these conflicts instantly. They enable a strategy of “Swim Lanes,” where specific domains are assigned exclusive ownership of specific topic clusters, ensuring that the portfolio occupies positions #1, #2, and #3, rather than fighting for #5.
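As a rough illustration of how such a keyword footprint could be mapped, here is a minimal Python sketch. The input format (tuples of domain, keyword, and position), the `max_position` cutoff, and the example domains are assumptions made for illustration; they do not describe any specific tool's export or internals.

```python
from collections import defaultdict


def find_cannibalization(rankings, max_position=20):
    """Return keywords where more than one portfolio domain ranks.

    rankings: iterable of (domain, keyword, position) tuples (assumed format).
    max_position: hypothetical cutoff; deeper rankings are ignored.
    """
    domains_by_keyword = defaultdict(dict)
    for domain, keyword, position in rankings:
        if position > max_position:
            continue
        # Keep only the best (lowest) position each domain holds for the keyword.
        best = domains_by_keyword[keyword].get(domain)
        if best is None or position < best:
            domains_by_keyword[keyword][domain] = position
    # A keyword is contested when two or more of our own domains rank for it.
    return {
        keyword: positions
        for keyword, positions in domains_by_keyword.items()
        if len(positions) > 1
    }


rankings = [
    ("brand-a.example", "enterprise cloud storage", 6),
    ("brand-b.example", "enterprise cloud storage", 4),
    ("brand-a.example", "cloud backup pricing", 3),
]
conflicts = find_cannibalization(rankings)
for keyword, positions in conflicts.items():
    print(keyword, positions)
```

Each contested keyword in the result is a candidate for a “Swim Lane” decision: one domain keeps the topic, and the others are redirected to a different angle.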

How AI Can Simplify Multi-Site SEO Management

AI simplifies management by acting as a unified intelligence layer that sits above individual domains. It ingests data from every site in the portfolio, normalizing it into a single dashboard. This allows for “Management by Exception”, where the AI highlights only the critical issues that need human attention, filtering out the noise of daily fluctuations.

How can AI automate SEO monitoring across multiple domains?

AI automates monitoring by continuously crawling every site in the portfolio 24/7, detecting anomalies like 404 spikes, schema errors, or Content Decay in real-time. It replaces the need for monthly manual audits with a live “Health Score” for the entire network, triggering alerts immediately when a threshold is breached.
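To make the idea of threshold-based anomaly alerts concrete, the sketch below flags a 404 spike by comparing today's count with recent history. The z-score approach and the threshold of 3.0 are illustrative assumptions, not a description of how any particular monitoring product works.

```python
from statistics import mean, stdev


def is_spike(history, today, z_threshold=3.0):
    """Return True when today's count is unusually high relative to history.

    history: recent daily 404 counts (at least two values).
    z_threshold: hypothetical sensitivity setting.
    """
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Flat history: any increase over the baseline counts as a spike.
        return today > baseline
    return (today - baseline) / spread > z_threshold


daily_404s = [12, 15, 11, 14, 13, 12, 16]
print(is_spike(daily_404s, today=90))
print(is_spike(daily_404s, today=15))
```

A production system would likely account for seasonality and traffic volume, but the principle is the same: the alert fires on deviation from the site's own baseline rather than on a fixed number.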

In 2026, waiting for a monthly audit is too slow. If a deployment on Friday breaks the checkout page on your Australian site, you need to know instantly, not on Monday. AI monitoring tools integrated with ClickRank provide this vigilance. They don’t just report errors; they prioritize them based on revenue impact. An error on a high-traffic product page triggers a “Critical Alert,” while a broken link on an old blog post is queued for later. This automated triage ensures that limited engineering resources are always focused on the most financially significant problems.
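The triage logic described above can be sketched in a few lines. The `Issue` fields, the revenue-at-risk formula (monthly visits multiplied by revenue per visit), and the `critical_threshold` value are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    url: str
    kind: str
    monthly_visits: int
    revenue_per_visit: float

    @property
    def revenue_at_risk(self) -> float:
        # Assumed proxy for financial impact; a real system may use richer data.
        return self.monthly_visits * self.revenue_per_visit


def triage(issues, critical_threshold=10_000.0):
    """Sort issues by revenue at risk and label each as 'critical' or 'queued'."""
    ranked = sorted(issues, key=lambda issue: issue.revenue_at_risk, reverse=True)
    return [
        ("critical" if issue.revenue_at_risk >= critical_threshold else "queued", issue)
        for issue in ranked
    ]


issues = [
    Issue("https://blog.example/old-post", "broken link", 300, 0.1),
    Issue("https://shop.example/au/checkout", "page error", 40_000, 2.5),
]
for label, issue in triage(issues):
    print(label, issue.url, issue.kind)
```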

Which SEO tasks are best suited for AI automation at scale?

Tasks that are repetitive, rule-based, and data-heavy are perfect for automation. This includes Meta Tag Optimization, Image Alt Text Generation, Internal Link Building, and Schema Markup Injection. AI can execute these tasks across thousands of pages instantly, ensuring 100% compliance with SEO best practices without human drudgery.

For a multi-site portfolio, the ability to propagate a change globally is a superpower. If Google introduces a new “Merchant Listing” schema, AI can identify every eligible product page across 50 domains and inject the code automatically. Manually, this would take months. AI also excels at “Log File Analysis” at scale, identifying how Googlebot is crawling the network and spotting inefficiencies like crawl traps that are wasting the portfolio’s aggregate crawl budget.

How ClickRank identifies and fixes technical SEO issues across all sites simultaneously

ClickRank uses a centralized “Command Center” architecture to audit all connected domains at once. It identifies systemic issues, like a slow JavaScript framework affecting 10 sites, and offers 1-Click Fixes that can be deployed across the entire group, ensuring rapid remediation of technical debt.

This capability transforms technical SEO from a game of “Whack-a-Mole” into a strategic operation. Instead of fixing the same missing H1 tag on 50 different sites one by one, ClickRank allows the central team to apply a fix template globally. It also monitors the “Health Velocity” of the portfolio, showing which sites are improving and which are degrading. By integrating directly with the CMS of each site, ClickRank bypasses the need for developer tickets for common issues, allowing the SEO team to maintain technical hygiene autonomously and instantaneously.

How can AI maintain content consistency across multiple websites?

Content consistency is the bedrock of brand identity. AI ensures that every piece of content, regardless of which domain it lives on, adheres to the brand’s voice, quality standards, and strategic goals. It acts as a global editor-in-chief, enforcing guidelines that human editors might miss.

How does AI help enforce brand voice and messaging standards globally?

AI-powered editorial tools analyze content against a “Brand Knowledge Graph” and style guide. They flag deviations in tone, terminology, or legal compliance before publication. This ensures that a blog post written by a freelancer in Singapore sounds just as authoritative and “on-brand” as one written by the HQ team in New York.

In 2026, maintaining a consistent “Brand Entity” is crucial for E-E-A-T. If your sites contradict each other, for example, one claiming a product is “AI-powered” and another saying it is “Algorithm-based”, Google’s confidence in your entity drops. AI enforcement tools prevent this semantic drift. They can also auto-correct outdated product names or non-compliant claims across the entire archive of content. If a product is rebranded, the AI can find and update every mention across 10,000 pages in minutes, preserving the integrity of the brand’s messaging globally.

How can AI ensure consistent keyword targeting without cross-domain conflict?

AI manages a centralized “Keyword Ledger” for the entire portfolio. When a local team plans a new piece of content, the AI checks the topic against the ledger. If another site in the portfolio already owns that topic, the system flags a “Cannibalization Risk” and suggests a different angle or a cross-domain link instead.
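A ledger of this kind can be modeled as a simple mapping from normalized topics to owning domains. The class below is a minimal sketch; the `KeywordLedger` name, its methods, and the normalization rule are assumptions for illustration rather than an actual product interface.

```python
class KeywordLedger:
    """Hypothetical portfolio-wide record of which domain owns which topic."""

    def __init__(self):
        self._owners = {}

    @staticmethod
    def _normalize(topic):
        # Lowercase and collapse whitespace so near-identical topics match.
        return " ".join(topic.lower().split())

    def claim(self, topic, domain):
        """Assign the topic to a domain if unowned; return True if domain owns it."""
        owner = self._owners.setdefault(self._normalize(topic), domain)
        return owner == domain

    def check(self, topic, domain):
        """Return a warning string if another domain owns the topic, else None."""
        owner = self._owners.get(self._normalize(topic))
        if owner is None or owner == domain:
            return None
        return f"Cannibalization risk: '{topic}' is owned by {owner}"


ledger = KeywordLedger()
ledger.claim("Enterprise Cloud Storage", "brand-b.example")
print(ledger.check("enterprise  cloud storage", "brand-a.example"))
print(ledger.check("cloud backup pricing", "brand-a.example"))
```

Exact-match normalization is the simplest possible rule; a real system would probably need semantic matching to catch topics that are phrased differently but target the same intent.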

This proactive governance is essential. Instead of unknowingly competing, teams are guided to collaborate. The AI might suggest, “The UK site ranks #1 for this topic; consider localizing their content rather than writing from scratch.” This efficiency prevents wasted effort and strengthens the portfolio’s overall authority. By assigning “Topic Ownership” to specific domains based on their existing authority and relevance, AI ensures that the enterprise occupies the maximum amount of SERP real estate without infighting.

How can AI assist with multi-region and multi-language SEO?

Scaling SEO globally requires navigating a maze of languages and search behaviors. AI streamlines localization, moving beyond simple translation to “Transcreation”, adapting content to fit the cultural and search intent context of each target market.

How can AI optimize hreflang tags and localization strategies automatically?

AI automates the generation and auditing of XML sitemaps and Hreflang Tags. It checks for common errors like “Return Tag Missing” or conflicting country codes across millions of URLs. ClickRank can dynamically inject the correct tags, ensuring that users in Mexico see the es-mx version while users in Spain see es-es.

Hreflang is notoriously fragile; a single broken link can invalidate a cluster. AI monitoring ensures these tags remain valid as pages are added or removed. Beyond tags, AI analyzes the “Localization Fit” of content. It can flag if a US-centric article is being served to a Japanese audience without necessary cultural adaptations (like currency or units of measure). This ensures that the technical signals (tags) match the on-page experience (content), which is the key to ranking in international markets.
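The “Return Tag Missing” check mentioned earlier is straightforward to express in code: if page A lists page B as an alternate, page B must list page A in return. The sketch below assumes the hreflang annotations have already been crawled into a dictionary; that input format and the example URLs are hypothetical.

```python
def missing_return_tags(hreflang_map):
    """Find alternates that do not link back to the page that references them.

    hreflang_map: {page_url: {lang_code: alternate_url}} (assumed crawl output).
    Returns a list of (source_url, target_url) pairs lacking a return tag.
    """
    missing = []
    for source, alternates in hreflang_map.items():
        for target in alternates.values():
            if target == source:
                continue  # A self-reference needs no return tag.
            back_links = hreflang_map.get(target, {})
            if source not in back_links.values():
                missing.append((source, target))
    return missing


hreflang_map = {
    "https://example.com/mx/": {
        "es-mx": "https://example.com/mx/",
        "es-es": "https://example.com/es/",
    },
    # The Spanish page forgets to reference the Mexican version.
    "https://example.com/es/": {
        "es-es": "https://example.com/es/",
    },
}
print(missing_return_tags(hreflang_map))
```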

How can AI track regional search trends for hyper-local content targeting?

AI monitors search trends at a granular, postal-code level across the globe. It identifies “Hyper-Local” opportunities, like a sudden spike in interest for a specific product in Mumbai, that a global marketing team would miss. This allows regional teams to deploy targeted content that captures emerging local demand.

In 2026, “Global” is just a collection of many “Locals.” AI tools scrape local SERPs to understand the specific intent nuances. For example, “football boots” in the UK requires different semantics than “soccer cleats” in the US. AI identifies these Semantic Entities and prompts local writers to include them. It enables a “Glocal” strategy where the overarching brand message is global, but the keyword targeting and content examples are surgically adapted to the local user’s reality.

Streamlining SEO Operations with AI

Operational efficiency is the competitive advantage of the future. AI removes the friction of managing a large portfolio, allowing SEO teams to focus on strategy and growth rather than data entry and reporting.

How can AI simplify site audits and performance monitoring for 100+ sites?

AI simplifies audits by running them in parallel. Instead of auditing one site at a time, ClickRank crawls the entire portfolio simultaneously, aggregating the data into a “Global Health Dashboard.” This highlights systemic patterns, allowing the central team to push one fix that solves problems across 100+ domains instantly.

This parallel processing power is vital. Manual audits of 100 sites would take a quarter; AI does it in a day. It also allows for “Differential Auditing”, comparing the performance of Site A vs. Site B to identify best practices. If the German site has significantly better Core Web Vitals than the French site, the AI highlights the specific configuration difference, allowing the French team to replicate the success. It turns the portfolio into a learning laboratory where insights from one domain elevate the others.

How can AI track competitor SEO performance across multiple global markets?

AI tracks competitors on a global scale, monitoring their rankings, content velocity, and backlink profiles across every target region. It identifies when a competitor launches a new localized site or captures market share in a specific country, sending alerts to the relevant regional manager for immediate counter-action.

Competitor landscapes vary by region. Your rival in Brazil might be different from your rival in Canada. AI builds a “Competitor Matrix” for each domain in the portfolio. It reveals gaps where the enterprise is under-indexed relative to local players. For instance, it might show that while you dominate in English, local competitors are winning on Spanish long-tail keywords. This insight drives specific resource allocation to close regional gaps and defend market share globally.

How does ClickRank automate executive reporting to save time for enterprise teams?

ClickRank automates reporting by pulling live data into customizable, role-based dashboards. It generates “Executive Summaries” using Natural Language Generation (NLG) to explain performance trends in plain English (“Revenue up 10% due to improved rankings in EMEA”), saving hours of manual slide deck creation.

Enterprise reporting is often a bottleneck. SEO managers spend the first week of every month copy-pasting charts. ClickRank eliminates this. It sends automated reports to stakeholders: CTOs get technical health reports, CMOs get revenue attribution reports, and Country Managers get regional performance reports. This ensures everyone has the data they need to make decisions without burdening the central SEO team. It standardizes the metrics used across the organization, creating a single version of the truth.

Measuring Success Across Multi-Site SEO Portfolios

Measuring success in a multi-site environment requires a shift from “site-level” metrics to “portfolio-level” economics. AI enables this by normalizing data and attributing value based on contribution to the total enterprise bottom line.

Which KPIs should enterprises track to measure portfolio-wide SEO performance?

Enterprises should track Aggregate Organic Revenue, Global Market Share, Portfolio Health Score, and Cross-Domain Cannibalization Rate. These metrics provide a holistic view of the network’s value, moving beyond individual site traffic to measure the collective efficiency and financial impact of the digital ecosystem.

Tracking “Global Market Share” is particularly powerful. It answers the question: “Of all the searches for our category worldwide, what % do we capture?” This aligns SEO with broader business goals. The “Cannibalization Rate” metric is a unique efficiency KPI; lowering it means the portfolio is working more cooperatively. By focusing on these high-level metrics, leadership can evaluate the SEO program as a strategic asset class, making investment decisions based on portfolio theory rather than isolated site performance.
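One plausible way to compute two of these KPIs is sketched below. The exact definitions are assumptions: market share is taken as captured organic clicks divided by total category searches, and the cannibalization rate as the share of tracked keywords for which more than one portfolio domain ranks.

```python
def global_market_share(captured_clicks, total_category_searches):
    """Percentage of category searches captured by the portfolio (assumed definition)."""
    if total_category_searches <= 0:
        raise ValueError("total_category_searches must be positive")
    return 100.0 * captured_clicks / total_category_searches


def cannibalization_rate(domains_by_keyword):
    """Percentage of tracked keywords contested by two or more portfolio domains.

    domains_by_keyword: {keyword: set of portfolio domains ranking for it}.
    """
    if not domains_by_keyword:
        return 0.0
    contested = sum(1 for domains in domains_by_keyword.values() if len(domains) > 1)
    return 100.0 * contested / len(domains_by_keyword)


tracked = {
    "enterprise cloud storage": {"brand-a.example", "brand-b.example"},
    "cloud backup pricing": {"brand-a.example"},
    "object storage api": {"brand-b.example"},
    "archive storage": {"brand-c.example"},
}
print(global_market_share(25_000, 200_000))
print(cannibalization_rate(tracked))
```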

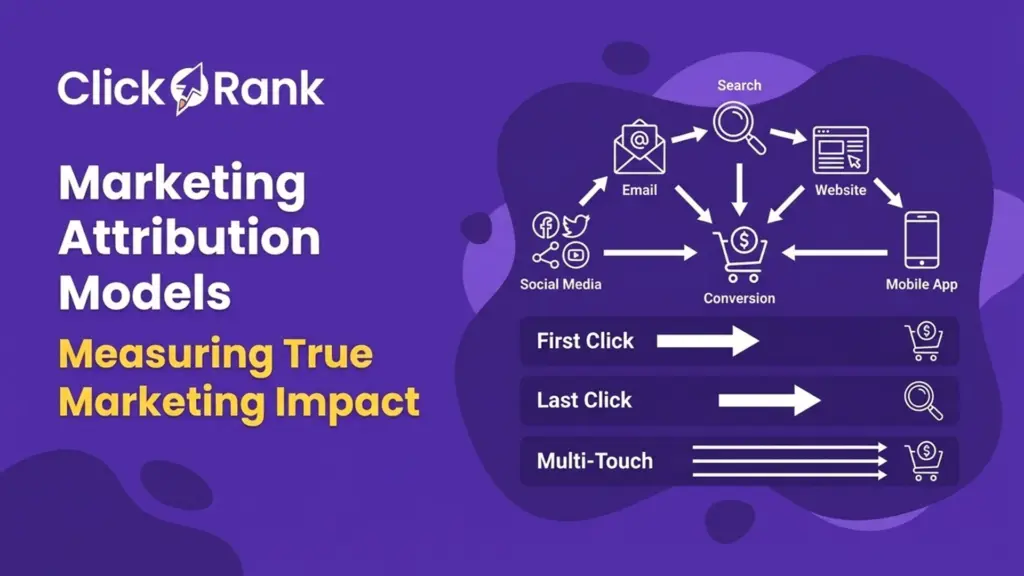

How can AI link SEO improvements to revenue and business outcomes?

AI integrates SEO data with ERP and CRM systems to map organic traffic to closed revenue. It uses Multi-Touch Attribution to assign value to content across the entire portfolio, proving that a blog post on a subsidiary site contributed to a sale on the main corporate domain.

This connectivity is vital for proving ROI. Often, a user creates a journey across multiple brand properties. They might read a review on your consumer site and buy on your B2B site. Traditional analytics miss this hand-off. AI-driven “Identity Resolution” connects these sessions, attributing the revenue back to the originating SEO effort. This proves the ecosystem value of the portfolio, justifying the maintenance costs of smaller, supporting sites that might appear “unprofitable” in isolation.

How does AI help identify high-impact sites and “under-performing” pages?

AI analyzes the “Revenue per Visit” (RPV) and “Engagement Velocity” of every page in the portfolio. It creates a “Scatter Plot” of assets: High Traffic/Low Value (Optimization Candidates), Low Traffic/High Value (Promotion Candidates), and Low Traffic/Low Value (Pruning Candidates). This prioritizes where the team should focus their energy.
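The quadrant logic can be sketched as a small classifier. The cutoff values are hypothetical, and the fourth label (“protect”, for High Traffic/High Value pages) is an assumption added so that every page receives a category; the text above names only three.

```python
def classify_page(traffic, revenue_per_visit, traffic_cutoff, value_cutoff):
    """Place a page in a quadrant based on traffic and Revenue per Visit (RPV)."""
    high_traffic = traffic >= traffic_cutoff
    high_value = revenue_per_visit >= value_cutoff
    if high_traffic and not high_value:
        return "optimize"  # High Traffic/Low Value
    if not high_traffic and high_value:
        return "promote"   # Low Traffic/High Value
    if not high_traffic and not high_value:
        return "prune"     # Low Traffic/Low Value
    return "protect"       # High Traffic/High Value (label is an assumption)


pages = {
    "/pricing": (12_000, 0.40),
    "/whitepaper": (300, 6.00),
    "/2019-news": (50, 0.05),
}
for path, (traffic, rpv) in pages.items():
    print(path, classify_page(traffic, rpv, traffic_cutoff=5_000, value_cutoff=1.0))
```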

How can predictive analytics forecast ROI across multiple website assets?

Predictive analytics models the potential lift of various SEO initiatives. It might predict that fixing speed issues on Site A will yield $50k, while creating new content for Site B will yield $200k. This allows the enterprise to allocate budget to the highest-yield projects, maximizing the ROI of the SEO spend across the diverse portfolio.
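Once lift predictions exist, budget allocation becomes a selection problem. The sketch below uses a simple greedy rule (fund the projects with the highest predicted lift per dollar first); this is one possible heuristic, not necessarily what a forecasting platform does. The $50k and $200k lifts come from the example above, while the project costs and the third project are hypothetical.

```python
def allocate_budget(projects, budget):
    """Greedily fund projects by predicted lift per dollar of cost.

    projects: list of (name, cost, predicted_lift) with cost > 0.
    Returns (funded project names in funding order, remaining budget).
    """
    funded = []
    remaining = budget
    by_yield = sorted(projects, key=lambda p: p[2] / p[1], reverse=True)
    for name, cost, _lift in by_yield:
        if cost <= remaining:
            funded.append(name)
            remaining -= cost
    return funded, remaining


projects = [
    ("Site A speed fixes", 20_000, 50_000),
    ("Site B new content", 60_000, 200_000),
    ("Site C redesign", 90_000, 120_000),  # hypothetical extra project
]
print(allocate_budget(projects, budget=100_000))
```

A greedy pass is easy to explain to stakeholders but is not guaranteed to be optimal; when projects are large relative to the budget, a knapsack-style solver may find a better combination.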

How can AI highlight opportunities for cross-site link building and content sharing?

AI scans the portfolio for “Internal Link Gaps.” It identifies opportunities where Site A mentions a topic that Site B is authoritative on, suggesting a cross-domain link. This strengthens the “Link Graph” of the entire network, passing authority from strong domains to weaker ones to lift the entire fleet.
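A basic version of this gap scan can be written as a text match against a table of topic owners. The data structures below (a page index, a topic-to-domain authority map, and a set of existing cross-domain links) are assumed inputs, and plain substring matching stands in for whatever semantic matching a real system would use.

```python
def find_link_gaps(pages, topic_authority, existing_links):
    """Suggest cross-domain links from pages that mention another domain's topic.

    pages: {url: (domain, page_text)}
    topic_authority: {topic: authoritative_domain}
    existing_links: set of (url, target_domain) pairs already in place
    """
    gaps = []
    for url, (domain, text) in pages.items():
        lowered = text.lower()
        for topic, authority_domain in topic_authority.items():
            if authority_domain == domain:
                continue  # A site does not need a cross-domain link to itself.
            mentioned = topic.lower() in lowered
            if mentioned and (url, authority_domain) not in existing_links:
                gaps.append((url, topic, authority_domain))
    return gaps


pages = {
    "https://site-a.example/guide": ("site-a.example", "Our guide covers cloud backup basics."),
    "https://site-b.example/backup": ("site-b.example", "Everything about cloud backup."),
}
topic_authority = {"cloud backup": "site-b.example"}
print(find_link_gaps(pages, topic_authority, existing_links=set()))
```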

Best Practices for Managing Multi-Site SEO with AI

Managing multi-site SEO with AI requires a centralized governance model where AI automates technical standards and monitoring, while human teams handle strategy and localization. It involves establishing a “Single Source of Truth” for data and using predictive analytics to prioritize resources based on global revenue impact rather than isolated site metrics.

How should enterprises prioritize SEO tasks across a diverse portfolio?

Prioritization should be based on “Business Impact Score,” a metric calculated by AI that combines search volume, current rank, and conversion potential. Tasks with the highest potential revenue impact are surfaced to the top of the queue, regardless of which site they belong to, ensuring resources flow to value.
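The text does not specify how the score is calculated, so the formula below is only one plausible construction: the estimated gain in click-through rate from the current rank to a target rank, multiplied by search volume, conversion rate, and order value. The click-through figures in `CTR_BY_RANK` are placeholder values, not measured data.

```python
# Placeholder click-through rates by position; replace with your own data.
CTR_BY_RANK = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}
DEFAULT_CTR = 0.01  # assumed rate for any position not listed above


def business_impact_score(search_volume, current_rank, conversion_rate,
                          order_value, target_rank=1):
    """Estimate the monthly revenue gain from moving a keyword to target_rank."""
    current_ctr = CTR_BY_RANK.get(current_rank, DEFAULT_CTR)
    target_ctr = CTR_BY_RANK.get(target_rank, DEFAULT_CTR)
    ctr_gain = max(target_ctr - current_ctr, 0.0)
    return ctr_gain * search_volume * conversion_rate * order_value


tasks = [
    ("site-a.example: improve 'cloud backup'", 10_000, 5, 0.02, 100.0),
    ("site-b.example: improve 'object storage'", 50_000, 12, 0.01, 40.0),
    ("site-c.example: refresh 'archive storage'", 8_000, 1, 0.03, 90.0),
]
# Rank every task in one queue, regardless of which site it belongs to.
queue = sorted(tasks, key=lambda t: business_impact_score(*t[1:]), reverse=True)
for name, *_ in queue:
    print(name)
```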

What governance frameworks ensure consistency and quality at scale?

Implement a “Hub and Spoke” governance model supported by AI. The Central Hub sets the standards (schema, core vitals, brand voice) which are enforced by AI policies. Local Spokes execute strategy within these guardrails. Regular “AI Audits” ensure compliance, flagging any Spoke that drifts from the Hub’s standards.
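In practice, a Hub standard can be expressed as a machine-checkable policy that each Spoke is audited against. The sketch below is illustrative: the policy keys, the required schema types, and the shape of the site records are assumptions, and the 2.5-second Largest Contentful Paint limit is used here only as an example threshold.

```python
HUB_POLICY = {
    "max_lcp_seconds": 2.5,  # example Core Web Vitals threshold
    "required_schema": {"Organization", "BreadcrumbList"},  # hypothetical standard
}


def audit_spoke(site, policy=HUB_POLICY):
    """Return a list of human-readable policy violations for one Spoke site."""
    violations = []
    if site["lcp_seconds"] > policy["max_lcp_seconds"]:
        violations.append(
            f"LCP {site['lcp_seconds']}s exceeds {policy['max_lcp_seconds']}s"
        )
    missing = policy["required_schema"] - set(site["schema_types"])
    if missing:
        violations.append("missing schema: " + ", ".join(sorted(missing)))
    return violations


spokes = {
    "de.example": {"lcp_seconds": 1.9, "schema_types": ["Organization", "BreadcrumbList"]},
    "fr.example": {"lcp_seconds": 3.4, "schema_types": ["Organization"]},
}
for domain, site in spokes.items():
    print(domain, audit_spoke(site) or "compliant")
```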

How can teams avoid common mistakes when managing multi-site SEO?

Common mistakes include neglecting “Orphan Sites” (acquisitions that are forgotten), allowing technical debt to accumulate on legacy domains, and failing to localize content. AI prevents this by maintaining a comprehensive “Asset Register” that actively monitors every URL, ensuring no part of the portfolio is left behind or allowed to rot.

Stop managing by crisis and start managing by strategy. Try the one-click optimizer today and see how easy it is to maintain technical excellence across every site you own.

Can AI fully manage SEO across multiple websites without human oversight?

No. While AI can manage monitoring, technical fixes, and large-scale data analysis, human oversight is essential for strategy, creative direction, and stakeholder alignment. AI acts as the autopilot, but humans remain the pilot—setting goals, handling nuance, and making strategic decisions.

Why is ClickRank the best tool for enterprise multi-site SEO management?

ClickRank excels because it provides centralized, real-time control over unlimited domains. Its ability to deploy 1-Click Fixes across an entire site portfolio makes it an operational platform, not just a reporting tool—delivering speed, consistency, and scalability at enterprise level.

How often should multi-site SEO performance be monitored?

Technical health and critical errors should be monitored continuously (24/7) by AI systems. Strategic metrics such as rankings, traffic, and revenue impact should be reviewed weekly by regional teams and monthly by executive leadership to guide resource allocation.

Can AI help coordinate SEO teams across different time zones and languages?

Yes. AI platforms function as centralized collaboration hubs, translating insights and tasks into local languages and standardizing workflows. This enables seamless hand-offs between time zones and maintains continuous, 24-hour SEO operations.

How can AI detect SEO issues before they impact global rankings?

AI uses predictive anomaly detection to identify early warning signals—such as crawl-rate drops or rising server latency—before rankings decline. This proactive approach allows teams to fix root causes early, preventing SERP impact.

What are the key challenges in implementing AI for multi-site SEO?

The main challenges include data integration across multiple CMS and analytics tools, change management to build trust among local teams, and initial setup costs. However, the long-term efficiency, scalability, and revenue protection provided by platforms like ClickRank far outweigh these hurdles.