Search Engine Fundamentals explain how search engines crawl, index, rank, and understand web content to deliver relevant results. If you don’t understand these basics, SEO feels confusing and random. But once you know how search systems actually work, everything becomes clear and strategic.

This guide breaks down Search Engine Fundamentals in simple terms. You’ll learn how search engines discover pages, decide what to store, and choose what appears first. You’ll also understand how AI is changing modern search.

This guide pairs with SEO Basics, where we explain how to optimize your website step by step. Here, we focus on the foundation: how search engines operate behind the scenes. By the end, you’ll know how to align your content with how search systems truly work.

What Are Search Engine Fundamentals?

Search Engine Fundamentals are the core principles that explain how search engines crawl, index, rank, and understand web content. They describe the systems and processes that allow search engines to discover pages, analyze information, and deliver relevant results to users.

These fundamentals matter because SEO only works when you align with how search systems actually function. If you understand crawling, indexing, ranking signals, and query interpretation, you can make smarter decisions about content, structure, and technical setup. In today’s AI-driven search landscape, knowing these foundations is even more important.

When you master Search Engine Fundamentals, you stop guessing and start building pages that search engines can easily discover, understand, and trust.

What Is a Search Engine?

A search engine is a software system that finds, organizes, and ranks information from the web based on user queries. It searches an index of billions of pages and returns the most relevant results in seconds.

Search engines use automated programs called crawlers to discover content. They store that content in massive databases called indexes. When someone types a query, ranking algorithms decide which pages best match the search intent.

Modern search engines do more than match keywords. They interpret meaning, context, and user behavior. This is why understanding Search Engine Fundamentals is essential for SEO. If your content is structured clearly and answers real questions, search systems can process it more effectively and rank it higher.

Why Were Search Engines Created in the First Place?

Search engines were created to organize the rapidly growing amount of information on the internet. As websites increased in the 1990s, users needed a way to quickly find relevant pages without manually browsing directories.

Early internet users struggled to locate useful information because there was no structured discovery system. Search engines solved this by automating content discovery and retrieval. They allowed people to type a question and receive instant results.

This innovation changed how we access knowledge. Today, search engines are not just tools; they are gateways to information, products, services, and decisions. Understanding Search Engine Fundamentals helps you see why visibility in search is critical for businesses and creators.

How Have Search Engines Evolved Over Time?

Search engines have evolved from simple keyword-matching tools into intelligent AI-driven systems. Early systems focused on basic indexing, while modern engines understand context, intent, and relationships between entities.

The biggest shift happened when ranking moved beyond keyword density. Algorithms began evaluating authority, relevance, and quality signals. Over time, machine learning and natural language processing improved result accuracy.

Today, AI systems analyze user behavior, semantic meaning, and content usefulness. This evolution shows why Search Engine Fundamentals are not static. The core stages (crawl, index, rank) remain, but the intelligence behind them has become far more advanced.

How did early search engines like Archie and AltaVista work?

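Early search engines like Archie and AltaVista worked by building simple indexes and matching keywords against them. Archie, launched in 1990, indexed file names on public FTP servers, while AltaVista, launched in 1995, indexed the full text of web pages. Both relied heavily on keyword matching. If a page repeated a term frequently, it had a better chance of ranking higher.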

These systems did not understand meaning or context. They could not evaluate authority or trust. Results were often easy to manipulate because rankings depended mainly on keyword presence.

There was little filtering for quality. As the web grew, this approach became less effective. Users needed better relevance and cleaner results. This limitation opened the door for more advanced ranking systems that could evaluate links and authority.

What changed with Google’s PageRank innovation?

Google’s PageRank changed search by using backlinks as a measure of authority. Instead of relying only on keywords, it evaluated how many websites linked to a page and how trustworthy those linking sites were.

This shifted search from keyword repetition to authority-based ranking. A page with strong backlinks from reputable sources gained higher trust and better visibility. This improved result quality significantly.

PageRank introduced the idea that links act like votes. But not all votes are equal: links from trusted sites carry more weight. This innovation laid the foundation for modern ranking systems and permanently changed Search Engine Fundamentals.
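To make the "links as votes" idea concrete, here is a minimal sketch of a simplified PageRank calculation. It is an illustration, not Google’s production system: the `toy_web` graph, the `pagerank` function name, the damping value of 0.85, and the iteration count are all assumptions chosen for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank. `links` maps each page to the pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start with equal rank everywhere

    for _ in range(iterations):
        # Every page keeps a small baseline share of rank.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its "vote" evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page site: nothing links to "orphan".
toy_web = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],
}
ranks = pagerank(toy_web)
top_page = max(ranks, key=ranks.get)
```

In this toy graph, `home` ends up with the highest score because every other page links to it, while `orphan` keeps only the baseline share because no page votes for it.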

How has AI transformed modern search engines?

AI has transformed search engines by enabling them to understand meaning, context, and intent rather than just keywords. Machine learning models analyze patterns in language and user behavior to deliver more accurate results.

Modern systems can interpret conversational queries, synonyms, and complex questions. They also personalize results based on user location, history, and preferences. AI helps filter spam and low-quality content more effectively.

Today’s search engines act more like answer engines. They aim to solve problems directly. Understanding this shift is critical because content must now focus on clarity, depth, and usefulness rather than simple keyword targeting.

How Do Search Engines Actually Work?

Search engines work through a structured process of crawling, indexing, processing, and ranking content to deliver the best results for a query. These systems operate continuously, scanning the web, updating their databases, and refining rankings in real time.

Understanding this process is central to Search Engine Fundamentals because SEO depends on aligning with each stage. If your page cannot be crawled, it will not be indexed. If it is not indexed, it cannot rank. And if it lacks relevance or authority, it will not appear at the top.

Modern search engines combine automation, machine learning, and massive data systems to make this possible. Let’s break down exactly how this works step by step.

What Are the Three Core Stages of Search?

The three core stages of search are crawling, indexing, and ranking. Every search engine follows this structure, even though the technology behind it has become more advanced over time.

First, search engines crawl the web to discover pages. Second, they index those pages by analyzing and storing their content. Third, they rank indexed pages based on relevance, authority, and user signals.

These three stages form the backbone of Search Engine Fundamentals. If you understand them clearly, SEO becomes logical instead of mysterious. Each stage has specific technical requirements, and optimizing for all three ensures your content has the best chance of appearing in search results.

What Is Crawling?

Crawling is the process where search engine bots scan the web to discover new and updated pages. These automated programs follow links from one page to another, collecting data along the way.

Bots read HTML, analyze links, and identify new URLs. If your page is not linked internally or externally, it may never be discovered. That is why strong internal linking and XML sitemaps are essential.

Crawling does not guarantee ranking. It simply means the page has been found. In Search Engine Fundamentals, crawling is the first gate. If a page cannot be accessed due to technical errors, blocked directives, or poor structure, it will never move to the next stage.

What Is Indexing?

Indexing is the stage where discovered pages are analyzed and stored in a massive database. During this process, search engines evaluate content, keywords, structure, and signals to decide if the page deserves inclusion.

Not every crawled page gets indexed. Thin content, duplicate pages, or low-quality material may be ignored. Search engines extract meaning, identify entities, and understand the topic before storing the page in their index.

Think of the index as a digital library. Only approved and organized pages enter it. In Search Engine Fundamentals, indexing determines whether your content is eligible to compete in search results.

What Is Ranking?

Ranking is the process of ordering indexed pages based on relevance and quality for a specific query. When a user searches, algorithms instantly evaluate hundreds of signals to determine which results appear first.

Signals include content relevance, authority, backlinks, user behavior, freshness, and technical performance. Modern systems also use AI models to interpret intent and context.

Ranking happens in milliseconds. The search engine selects the most useful pages from its index and sorts them. In Search Engine Fundamentals, ranking is where competition occurs. Even if your page is indexed, it must outperform others to reach top positions.

What Happens Between Crawling and Ranking?

Between crawling and ranking, search engines render, process, analyze, filter, and store content in structured databases. This middle phase is often overlooked but is critical in Search Engine Fundamentals.

After a page is crawled, it goes through technical and semantic processing. The system renders JavaScript, extracts structured data, identifies keywords and entities, checks for duplicates, and evaluates quality signals.

Only after this deep processing does the page become fully eligible for ranking. If problems occur during rendering or quality evaluation, the page may be excluded from search results entirely. Understanding this hidden stage helps explain why some pages are crawled but never rank.

How does rendering work?

Rendering is the process where search engines load a page the way a browser would. This allows them to see JavaScript-generated content, images, and dynamic elements.

Modern websites often rely on JavaScript frameworks. If rendering fails, search engines may not see important content. This can prevent indexing or weaken ranking potential.

Search engines first crawl raw HTML, then use rendering systems to process scripts. This step ensures that the final visible content is evaluated accurately. In Search Engine Fundamentals, rendering bridges technical structure and content visibility.

How are pages processed and stored?

After rendering, search engines analyze the page’s structure, text, links, metadata, and structured data. They extract keywords, identify entities, and categorize the topic.

The processed information is stored in distributed databases designed for fast retrieval. Content is broken into searchable components rather than stored as simple full pages.

This structured storage allows instant matching when a user performs a query. In Search Engine Fundamentals, this processing stage ensures pages can be retrieved quickly and accurately when needed.

What filtering systems remove low-quality content?

Search engines use automated quality systems to filter spam, duplicate content, and manipulative pages. Machine learning models evaluate patterns such as keyword stuffing, unnatural links, and thin content.

Pages that fail quality checks may be excluded from indexing or ranked very low. These filters protect users from misleading or harmful information.

Modern filtering systems are constantly updated to fight new spam tactics. In Search Engine Fundamentals, quality control is essential because ranking systems only work effectively when low-quality content is minimized.

How Does Crawling Work in Detail?

Crawling works by sending automated bots across the web to discover, revisit, and update pages in search engine databases. It is the first and most critical step in Search Engine Fundamentals because without crawling, nothing can be indexed or ranked.

Search engines use advanced crawling systems that prioritize important pages, follow links, and respect website rules. They constantly decide which pages to visit, how often to revisit them, and how deeply to explore a site’s structure.

If your website has weak internal linking, blocked resources, or poor technical setup, crawlers may struggle to access key pages. Understanding crawling in detail allows you to remove barriers and ensure search engines can fully explore your content.

What Is a Search Engine Crawler?

A search engine crawler is an automated bot that scans websites to collect data for indexing. It systematically follows links, reads page code, and reports information back to search engine servers.

Crawlers operate continuously. They move from one URL to another, analyzing HTML, links, structured data, and metadata. They do not think like humans, but they follow logical pathways defined by site structure.

In Search Engine Fundamentals, crawlers are the discovery engine. If your site blocks bots unintentionally or creates broken links, valuable content may remain invisible. A clean site architecture ensures crawlers can efficiently explore and understand your website.

How Do Bots Discover New Pages?

Bots discover new pages mainly through links, sitemaps, and previously known URLs. Discovery begins when a crawler lands on a page and follows internal or external links to new destinations.

There are three main discovery methods:

- Internal links within your website

- Backlinks from other websites

- XML sitemaps submitted to search engines

If a page has no links pointing to it and is not included in a sitemap, it becomes difficult to find. In Search Engine Fundamentals, discoverability depends on connectivity. Strong internal linking and structured navigation make it easier for bots to reach deeper pages quickly.
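The discovery process described above can be sketched as a breadth-first walk over links. This is a simplified model, not how any particular search engine is implemented: the in-memory `SITE` dictionary stands in for real HTTP fetches, and the names `LinkExtractor`, `extract_links`, and `discover` are made up for this example.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


def discover(pages, seeds):
    """Breadth-first discovery starting from seed URLs (e.g. known pages or a sitemap)."""
    seen = set(seeds)
    queue = deque(seeds)
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)
        for link in extract_links(pages.get(url, "")):
            if link in pages and link not in seen:
                seen.add(link)
                queue.append(link)
    return order


# Hypothetical site: "/orphan" exists but no page links to it.
SITE = {
    "/": '<a href="/blog">Blog</a> <a href="/about">About</a>',
    "/blog": '<a href="/blog/post-1">Post 1</a>',
    "/about": '<a href="/">Home</a>',
    "/blog/post-1": "<p>No outgoing links</p>",
    "/orphan": "<p>Nothing links here</p>",
}

found = discover(SITE, ["/"])
found_with_sitemap = discover(SITE, ["/", "/orphan"])
```

Starting from the homepage alone, the crawl never reaches `/orphan`. Adding it as a second seed, which is roughly what a sitemap entry does, makes it discoverable.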

What Role Do Links Play in Crawling?

Links act as pathways that guide crawlers from one page to another. Without links, bots cannot move through the web efficiently.

Internal links help search engines understand site structure and content hierarchy. External backlinks help crawlers discover your site from other domains. Anchor text also provides contextual clues about page topics.

Broken links waste crawl resources and can stop bots from reaching important content. In Search Engine Fundamentals, links are not just ranking signals; they are navigation roads for crawlers. A well-structured linking strategy improves both discoverability and indexing speed.

How Do XML Sitemaps Influence Discovery?

XML sitemaps help search engines discover important pages more efficiently. They act as structured lists of URLs that site owners want crawled and indexed.

A sitemap does not guarantee indexing, but it improves discoverability. It can tell search engines when each page was last updated and, optionally, its relative priority and expected change frequency. Search engines treat these values as hints and may ignore them.

For large websites, sitemaps are especially valuable. They help bots locate deep pages that might not receive many internal links. In Search Engine Fundamentals, XML sitemaps serve as a guidance system that complements natural link-based discovery.
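As a rough illustration of what a sitemap contains, the sketch below builds a small one with Python’s standard library. The `build_sitemap` helper and the example.com URLs and dates are placeholders; the `urlset`, `url`, `loc`, and `lastmod` element names and the namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs and dates for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/deep-page", "2024-02-01"),
])
```

In practice the resulting file is usually saved as `sitemap.xml` and referenced from robots.txt or submitted through the search engine’s webmaster tools.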

What Is Crawl Budget and Why Does It Matter?

Crawl budget is the number of pages a search engine bot is willing to crawl on your site within a given time. It matters most for large or complex websites.

Search engines allocate crawl resources based on site authority, speed, and health. If your site has many low-quality or duplicate pages, bots may waste time crawling unimportant URLs instead of valuable ones.

To optimize crawl budget:

- Remove duplicate pages

- Fix broken links

- Improve site speed

- Strengthen internal linking

In Search Engine Fundamentals, crawl efficiency ensures that important pages are discovered and updated quickly, especially on large-scale websites.

How Do Robots.txt and Meta Directives Control Crawling?

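Robots.txt and meta directives tell search engines what they may crawl and index. Robots.txt controls which paths crawlers can access, while meta robots directives control whether a page is indexed and whether its links are followed.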

The robots.txt file sits at the root of a website and blocks or allows access to specific paths. Meta robots tags, placed inside page code, can instruct bots to “noindex” or “nofollow” certain pages.

Improper configuration can accidentally block valuable content. That is why careful setup is essential. In Search Engine Fundamentals, crawl control mechanisms protect sensitive pages while ensuring critical content remains accessible.
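A quick way to catch accidental blocks is to test your rules before deploying them. The sketch below uses Python’s built-in `urllib.robotparser` to evaluate a sample robots.txt. The rules, the `ExampleBot` user agent, and the example.com URLs are invented for illustration, and real crawlers may resolve conflicting rules differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Sample rules: block the admin area, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "ExampleBot" is a made-up crawler name; it falls under the "*" rules.
can_crawl_blog = parser.can_fetch("ExampleBot", "https://example.com/blog/post")
can_crawl_admin = parser.can_fetch("ExampleBot", "https://example.com/admin/settings")

print(can_crawl_blog)   # True
print(can_crawl_admin)  # False
```

Running a check like this against your most important URLs is a cheap safeguard whenever robots.txt changes.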

How Do Search Engines Handle JavaScript and Dynamic Content?

Search engines render JavaScript to understand dynamic content, but improper setup can still cause crawling issues. Modern websites often rely on frameworks that load content after the initial HTML.

Search engines first crawl raw HTML, then render scripts in a second processing stage. If JavaScript blocks essential text or links, crawlers may miss important content.

Best practices include:

- Server-side rendering when possible

- Avoiding hidden critical content

- Ensuring clean URL structures

In Search Engine Fundamentals, proper JavaScript handling ensures that dynamic websites remain fully crawlable and indexable.

What Happens During Indexing?

During indexing, search engines analyze, organize, and store web pages in structured databases so they can be retrieved instantly for relevant queries. This stage of Search Engine Fundamentals determines whether a page becomes eligible to rank at all.

Indexing is not automatic approval. After crawling, search engines evaluate content quality, structure, duplication, and technical signals before deciding to include a page in their index. Pages that fail quality or technical checks may be excluded.

Think of indexing as adding a book to a searchable library. If the content is unclear, duplicated, or low value, it may not be cataloged. Understanding this process helps you create pages that are clean, structured, and worthy of inclusion.

What Is an Index in Search Engines?

An index in search engines is a massive digital database that stores processed and organized web content. It allows search engines to retrieve results in milliseconds when users type a query.

Instead of scanning the entire web each time someone searches, engines pull results from this pre-built index. Pages are stored with structured information such as keywords, entities, metadata, and contextual signals.
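A common way to picture a pre-built index is an inverted index, which maps each term to the pages that contain it. The sketch below is a deliberately tiny version under that assumption. The function names and the three sample documents are hypothetical, and real indexes store far more than term-to-page mappings.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Map each term to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in docs.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index, query):
    """Return URLs that contain every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results


# Hypothetical mini-corpus.
DOCS = {
    "/crawling": "How crawlers discover pages through links",
    "/indexing": "How search engines store pages in an index",
    "/ranking": "How ranking algorithms order pages",
}

index = build_index(DOCS)
matches = search(index, "pages index")
```

Because the lookup only touches the entries for the query terms, answering a query does not require rereading any page, which is why retrieval can be so fast.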

In Search Engine Fundamentals, the index acts like a searchable map of the web. If your page is not indexed, it cannot appear in search results. That is why technical health, clear structure, and strong content quality are essential for visibility.

How Is Content Analyzed Before Being Indexed?

Before indexing, search engines analyze content for meaning, structure, quality, and uniqueness. They break down text, identify topics, detect duplication, and evaluate usefulness.

This analysis ensures that only valuable and relevant pages enter the index. Systems examine headings, body content, internal links, media elements, and structured data. They also assess technical signals like page speed and mobile compatibility.

In Search Engine Fundamentals, content analysis determines how well a page matches future queries. The better your content is structured and aligned with intent, the more accurately it can be categorized and stored for retrieval.

How are keywords extracted?

Search engines extract keywords by scanning page content, titles, headings, anchor text, and metadata. They identify frequently used terms and analyze their placement to understand topic focus.

However, modern systems do not rely only on repetition. They evaluate context and semantic relationships between words. Synonyms and related phrases are also recognized.

Keyword extraction helps categorize pages and match them to relevant queries. In Search Engine Fundamentals, natural keyword usage matters more than density. Clear structure and topic consistency improve accurate extraction and indexing.
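As a very rough approximation of placement-aware extraction, the sketch below counts terms and gives title words extra weight. The stopword list, the `title_weight` of 3, and the sample text are arbitrary assumptions for the example. Real systems use far richer language models than simple counting.

```python
import re
from collections import Counter

# Small illustrative stopword list, not an exhaustive one.
STOPWORDS = {"a", "an", "and", "the", "of", "to", "in", "for", "how", "is", "your"}


def extract_keywords(title, body, top_n=3, title_weight=3):
    """Rank terms by frequency, counting each title occurrence `title_weight` times."""
    counts = Counter()
    for text, weight in ((title, title_weight), (body, 1)):
        for token in re.findall(r"[a-z]+", text.lower()):
            if token not in STOPWORDS:
                counts[token] += weight
    return [term for term, _ in counts.most_common(top_n)]


keywords = extract_keywords(
    title="Crawl Budget Guide",
    body=(
        "Crawl budget is how many pages a bot will crawl. "
        "A healthy site helps the bot crawl more pages."
    ),
)
print(keywords)  # ['crawl', 'budget', 'guide']
```

Note how "guide" outranks "pages" even though "pages" appears more often in the body, because it appears in the title.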

How are entities identified?

Search engines identify entities by detecting people, places, brands, products, and concepts within content. They connect these entities to larger knowledge databases to understand relationships.

For example, if a page mentions a company, location, and product type, search engines link those entities together to interpret context. This goes beyond keywords and focuses on meaning.

Entity recognition strengthens semantic understanding. In Search Engine Fundamentals, clearly defined entities improve indexing accuracy and help search engines match content to complex queries.

How is content quality evaluated?

Content quality is evaluated through automated systems that analyze originality, depth, clarity, and user value. Search engines assess whether the page provides helpful, trustworthy information.

Thin content, excessive ads, misleading claims, or copied material may trigger quality filters. User behavior signals, such as engagement and bounce patterns, may also influence quality perception.

High-quality content increases the chance of successful indexing and stronger ranking potential. In Search Engine Fundamentals, quality evaluation ensures that search results remain useful and reliable for users.

How Do Search Engines Handle Duplicate Content?

Search engines handle duplicate content by selecting one preferred version and filtering out others. They do not usually penalize duplication directly, but they avoid indexing multiple identical pages.

When similar pages exist, search engines cluster them and choose a canonical version. This prevents search results from showing repeated content.

Duplicate content can occur due to URL parameters, pagination, or content reuse across domains. In Search Engine Fundamentals, managing duplication ensures that ranking signals are consolidated instead of divided across multiple versions.
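One textbook technique for spotting near-duplicates is to compare overlapping word sequences (shingles) with Jaccard similarity. The sketch below illustrates that idea. It is not a description of any search engine’s actual duplicate detection, and the shingle size of 3 and the sample texts are arbitrary.

```python
import re


def shingles(text, size=3):
    """Return the set of overlapping word sequences of length `size`."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(text_a, text_b):
    """Similarity from 0.0 (no shared shingles) to 1.0 (identical shingle sets)."""
    a, b = shingles(text_a), shingles(text_b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Same product copy served from two URLs, differing only in case and punctuation.
page_a = "Blue running shoes for men, size 10."
page_b = "blue running shoes for men size 10"
page_c = "Our guide to baking sourdough bread at home"

same = jaccard(page_a, page_b)       # 1.0
different = jaccard(page_a, page_c)  # 0.0
```

Pages whose similarity exceeds some chosen threshold could then be clustered together, with one version picked as the representative.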

What Is Canonicalization?

Canonicalization is the process of telling search engines which version of a page is the main or preferred one. This is done using canonical tags in the page’s HTML.

When multiple URLs contain similar content, the canonical tag signals which version search engines should index and rank. Search engines generally treat it as a strong hint rather than a strict command. This consolidates authority signals and prevents confusion.

Without proper canonicalization, ranking strength may split between duplicates. In Search Engine Fundamentals, canonical tags help maintain clarity and ensure search engines understand which page represents the original source.
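To check which canonical URL a page declares, you can read the `<link rel="canonical">` element from its HTML. The sketch below does this with Python’s standard library. The `CanonicalParser` class, the `canonical_url` helper, and the sample markup are illustrative assumptions.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr.get("href")


def canonical_url(html):
    """Return the declared canonical URL, or None if the page declares none."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


# A parameterized URL variant pointing at its preferred version.
variant_html = """
<html><head>
  <title>Running Shoes</title>
  <link rel="canonical" href="https://example.com/shoes">
</head><body>...</body></html>
"""

declared = canonical_url(variant_html)
missing = canonical_url("<html><head><title>No tag</title></head></html>")
```

Running this across URL variants (for example, the same page with different tracking parameters) shows whether they all point to the same preferred version.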

What Makes a Page Ineligible for Indexing?

A page becomes ineligible for indexing if it violates quality guidelines, contains technical errors, or includes blocking directives. Several factors can prevent inclusion in the index.

Common reasons include:

- “Noindex” meta tags

- Blocked URLs in robots.txt

- Thin or duplicate content

- Severe technical errors

- Manual spam actions

Even if crawled, a page may not qualify for indexing if it lacks value. In Search Engine Fundamentals, ensuring technical health and strong content quality is essential to remain index-eligible.

How Does Structured Data Help Search Engines Understand Content?

Structured data helps search engines understand page content by providing clear, machine-readable information about entities and relationships. It uses standardized vocabularies such as Schema.org, commonly embedded in the page as JSON-LD.

Structured data clarifies whether content represents a product, article, event, organization, or review. This improves indexing accuracy and increases eligibility for rich results.

For example, adding product schema can help search engines display pricing and ratings directly in search results. In Search Engine Fundamentals, structured data strengthens content interpretation and enhances visibility opportunities.
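Here is a minimal sketch of generating product markup as JSON-LD. The `Product`, `Offer`, and `AggregateRating` types and their properties come from the Schema.org vocabulary, while the helper names and product values are made up. Check each search engine’s documentation for the fields it requires before relying on this for rich results.

```python
import json


def product_schema(name, price, currency, rating, review_count):
    """Build a Schema.org Product description as a plain dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


def to_script_tag(data):
    """Wrap the data in the script tag used to embed JSON-LD in a page."""
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"


# Hypothetical product values.
schema = product_schema("Trail Running Shoes", 49.99, "USD", 4.6, 128)
tag = to_script_tag(schema)
```

The resulting script tag would typically be placed in the page’s HTML so crawlers can read the price and rating without parsing the visible layout.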

How Do Search Engines Rank Results?

Search engines rank results by evaluating relevance, authority, quality, and user context through complex algorithms. Ranking is the final and most competitive stage in Search Engine Fundamentals because this is where pages fight for top visibility.

When a user enters a query, search systems instantly analyze indexed pages and apply multiple ranking systems. These systems consider content match, backlink strength, user behavior, freshness, and personalization signals. The goal is simple: deliver the most helpful result for that specific search.

Ranking is dynamic. It changes based on query type, device, location, and intent. Understanding how ranking works allows you to create content that aligns with how search systems evaluate and prioritize pages.

What Is a Ranking Algorithm?

A ranking algorithm is a set of mathematical rules and systems that determine the order of search results. It evaluates hundreds of signals to decide which page deserves the top position.

The algorithm does not rely on one single factor. Instead, it weighs multiple signals such as content relevance, page quality, authority, user engagement, and technical performance. Machine learning models also refine rankings over time.

In Search Engine Fundamentals, ranking algorithms are constantly updated. This means SEO is not about shortcuts but about building long-term quality, relevance, and trust that align with evolving systems.

What Are Ranking Signals vs Ranking Systems?

Ranking signals are individual factors used to evaluate pages, while ranking systems are the broader frameworks that process those signals. Signals are measurable elements like backlinks or page speed. Systems are the AI-driven mechanisms that interpret them.

For example, a backlink is a signal. A link evaluation system analyzes its quality and relevance. Content length may be a signal, but a content quality system determines its usefulness.

Understanding this difference matters in Search Engine Fundamentals. Optimizing one signal in isolation rarely works. You must align with the full system that interprets those signals collectively.
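The toy model below shows why optimizing a single signal rarely wins: the "system" here is just a weighted sum that combines several signals. The signal names, weights, and page scores are invented for illustration, and real ranking systems are far more complex and not publicly specified.

```python
# Hypothetical weights: how much each signal contributes to the final score.
WEIGHTS = {"relevance": 0.5, "authority": 0.3, "freshness": 0.1, "speed": 0.1}


def score(signals, weights=WEIGHTS):
    """Combine individual signals (each 0.0 to 1.0) into a single score."""
    return sum(weights[name] * signals.get(name, 0.0) for name in weights)


def rank(pages):
    """Order page URLs from highest to lowest combined score."""
    return sorted(pages, key=lambda url: score(pages[url]), reverse=True)


# Made-up signal values for three competing pages.
PAGES = {
    "/thin-but-fast": {"relevance": 0.2, "authority": 0.1, "freshness": 0.9, "speed": 1.0},
    "/deep-guide": {"relevance": 0.9, "authority": 0.7, "freshness": 0.4, "speed": 0.6},
    "/popular-offtopic": {"relevance": 0.1, "authority": 0.9, "freshness": 0.5, "speed": 0.8},
}

ordering = rank(PAGES)
print(ordering)  # ['/deep-guide', '/popular-offtopic', '/thin-but-fast']
```

The fastest page and the most authoritative page both lose to the page that is reasonably strong across every signal.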

How Does Relevance Get Determined?

Relevance is determined by how closely a page matches the intent and meaning behind a search query. Modern search engines analyze more than keywords; they evaluate context and semantic relationships.

Relevance factors include:

- Keyword placement in titles and headings

- Topic depth and clarity

- Entity relationships

- Query intent alignment

Search systems compare the query with indexed content using semantic analysis and AI models. In Search Engine Fundamentals, relevance is the first filter. If a page does not directly answer the query, it cannot rank well no matter how strong its authority is.

How Is Authority Measured?

Authority is measured through trust signals such as backlinks, brand recognition, and content credibility. Search engines interpret links from other websites as endorsements.

Not all links are equal. Links from trusted, relevant domains carry more weight than low-quality or spammy ones. Brand mentions and consistent topic expertise also contribute to authority perception.

In Search Engine Fundamentals, authority builds over time. It cannot be faked easily. Publishing consistent, valuable content and earning natural backlinks strengthens ranking potential significantly.

How Do User Signals Influence Rankings?

User signals influence rankings by showing how people interact with search results. These signals may include click-through rate, dwell time, and engagement patterns.

If users frequently click a result and stay on the page, search systems may interpret it as helpful. If users quickly return to the results page, the content may be seen as less useful.

While user signals are not direct ranking factors in isolation, they help refine systems over time. In Search Engine Fundamentals, creating satisfying content improves behavioral signals and strengthens ranking stability.

How Does Freshness Affect Results?

Freshness affects rankings when queries require up-to-date information. For time-sensitive searches, newer content often receives priority.

Search engines analyze publishing dates, update frequency, and content changes. However, freshness only matters when relevant. For evergreen topics, authority and depth may outweigh recency.

In Search Engine Fundamentals, updating content strategically keeps it competitive. Regular improvements signal that the page remains accurate and useful for users.

How Does Personalization Impact Rankings?

Personalization impacts rankings by adjusting results based on user location, search history, and preferences. Two users searching the same term may see slightly different results.

Location influences local queries. Search history may shape recurring topic results. Device type can also affect ranking presentation.

Personalization does not replace core ranking systems, but it fine-tunes results for individuals. In Search Engine Fundamentals, this means SEO should focus on broad relevance and quality while understanding that final rankings may vary slightly between users.

How Do Search Engines Understand Search Queries?

Search engines understand search queries by analyzing intent, context, language patterns, and user behavior. They do not simply match keywords. Instead, modern systems interpret what the user actually wants to achieve.

In Search Engine Fundamentals, query understanding is critical because ranking depends on intent alignment. A page may contain the right keywords, but if it does not match user intent, it will not rank well. Search engines use natural language processing, machine learning models, and behavioral data to decode meaning.

Today’s systems analyze synonyms, entities, location, device type, and past searches. The goal is simple: return results that solve the user’s problem as accurately as possible.

What Is Search Intent?

Search intent is the underlying goal or purpose behind a user’s query. It explains why someone searches, not just what they type.

Search engines classify intent to deliver the most relevant results. Understanding intent is one of the most important parts of Search Engine Fundamentals because it shapes content strategy, page structure, and ranking potential.

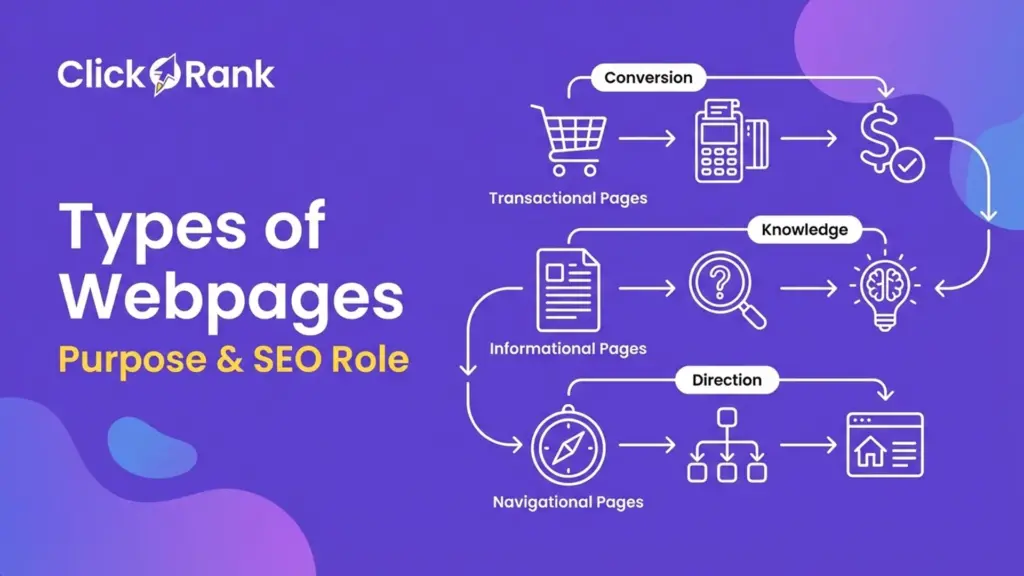

There are four main types of search intent: informational, navigational, transactional, and commercial investigation. Each requires a different content format. If your page aligns directly with the user’s intent, ranking becomes much more likely.

What Is Informational Intent?

Informational intent occurs when a user wants to learn something or find an answer. These searches often begin with words like “how,” “what,” or “why.”

Search engines prioritize detailed guides, tutorials, and educational content for informational queries. Content depth, clarity, and structure matter more than sales language.

In Search Engine Fundamentals, informational intent is common at the awareness stage. Providing clear explanations, structured headings, and helpful examples increases the chance of ranking for these searches.

What Is Navigational Intent?

Navigational intent happens when a user wants to visit a specific website or brand. For example, searching for a company name usually indicates this intent.

Search engines recognize brand queries and prioritize official pages or well-known resources. Strong brand signals and authority improve visibility for these searches.

In Search Engine Fundamentals, navigational intent shows the importance of brand building. If users actively search for your brand, it strengthens your credibility and overall search presence.

What Is Transactional Intent?

Transactional intent occurs when a user is ready to take action, such as buying a product or signing up for a service. These queries often include words like “buy,” “discount,” or “order.”

Search engines prioritize product pages, service pages, and ecommerce listings for transactional queries. Clear calls to action and strong trust signals improve performance.

In Search Engine Fundamentals, aligning with transactional intent requires optimized product descriptions, structured data, and smooth user experience to support conversions.

What Is Commercial Investigation Intent?

Commercial investigation intent appears when users compare options before making a decision. Searches often include terms like “best,” “review,” or “vs.”

Search engines display comparison guides, review articles, and in-depth evaluations for these queries. Authority and detailed analysis matter here.

In Search Engine Fundamentals, targeting commercial investigation intent requires balanced, informative content that helps users evaluate choices confidently.
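
The cue words named in the four intent sections above can be turned into a toy classifier. This is only a minimal sketch: the cue lists, the `classify_intent` name, and the fallback to navigational intent are assumptions made for illustration. Real search engines rely on learned models and behavioral data, not word lists.

```python
# Toy intent classifier built from the cue words named in this guide.
# The cue lists and the default label are illustrative assumptions.
INTENT_CUES = {
    "transactional": {"buy", "discount", "order"},
    "commercial investigation": {"best", "review", "vs"},
    "informational": {"how", "what", "why"},
}


def classify_intent(query: str) -> str:
    """Return the first intent whose cue words appear in the query."""
    words = set(query.lower().split())
    for intent, cues in INTENT_CUES.items():
        if words & cues:
            return intent
    # No cue word found: assume the user wants a specific site or brand.
    return "navigational"


for example in ["how do search engines work", "buy running shoes"]:
    print(example, "->", classify_intent(example))
```

Even this crude version shows why one page rarely serves every intent: the same topic produces different labels depending on a single word in the query.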

How Do Search Engines Interpret Context?

Search engines interpret context by analyzing surrounding words, user location, device type, and previous search behavior. Context helps clarify meaning when queries are short or vague.

For example, searching “apple” could refer to a fruit or a technology brand. Search engines examine related terms, past behavior, and trending data to determine the correct interpretation.

In Search Engine Fundamentals, contextual analysis ensures that results match real-world meaning. This is why writing clearly and using related terms naturally improves content understanding and ranking accuracy.
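
The “apple” example can be sketched as a simple overlap count between the words around a query and a set of cue words for each meaning. The cue sets, the `disambiguate` function, and the idea of passing recent searches as plain strings are all hypothetical; the sketch only illustrates how context can tip an ambiguous term one way or the other.

```python
# Hypothetical cue words for two meanings of "apple".
SENSES = {
    "apple (fruit)": {"pie", "recipe", "orchard", "juice", "tree"},
    "Apple (technology brand)": {"iphone", "mac", "store", "laptop", "stock"},
}


def disambiguate(query: str, recent_searches: tuple[str, ...] = ()) -> str:
    """Pick the sense whose cue words overlap most with the context."""
    context = set(query.lower().split())
    for past in recent_searches:
        context |= set(past.lower().split())
    scores = {sense: len(context & cues) for sense, cues in SENSES.items()}
    best = max(scores, key=scores.get)
    # With no supporting context at all, the query stays ambiguous.
    return best if scores[best] > 0 else "ambiguous"


print(disambiguate("apple pie recipe"))
print(disambiguate("apple", recent_searches=("iphone battery",)))
```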

What Is Query Rewriting?

Query rewriting is the process where search engines modify or expand a user’s query to improve result accuracy. This happens automatically behind the scenes.

Search engines may add synonyms, correct spelling errors, or expand abbreviations. For example, a search for “cheap hotels NYC” may be rewritten internally to include “affordable hotels in New York City.”

In Search Engine Fundamentals, query rewriting shows that exact keyword matching is no longer necessary. Semantic clarity and topic coverage matter more than repeating identical phrases.
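
A rough sketch of the three rewrite steps described above (spelling correction, abbreviation expansion, synonym expansion) might look like this. The lookup tables and the `rewrite_query` function are invented for illustration; production systems learn these mappings from data instead of hard-coding them.

```python
# Hypothetical rewrite rules; a real engine learns these from data.
SPELLING = {"hotles": "hotels"}
ABBREVIATIONS = {"nyc": "new york city"}
SYNONYMS = {"cheap": ["affordable"]}


def rewrite_query(query: str) -> list[str]:
    """Return the normalized query followed by synonym-expanded variants."""
    tokens = []
    for token in query.lower().split():
        token = SPELLING.get(token, token)        # correct known typos
        token = ABBREVIATIONS.get(token, token)   # expand abbreviations
        tokens.append(token)

    variants = [" ".join(tokens)]
    for i, token in enumerate(tokens):
        for synonym in SYNONYMS.get(token, []):
            swapped = tokens[:i] + [synonym] + tokens[i + 1:]
            variants.append(" ".join(swapped))
    return variants


print(rewrite_query("cheap hotles NYC"))
```

The engine can then search for every variant, which is why a page about “affordable hotels in New York City” can match a query that never uses those words.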

How Do Machine Learning Models Interpret Ambiguous Queries?

Machine learning models interpret ambiguous queries by analyzing patterns, intent signals, and large datasets of user behavior. These models learn from billions of searches to predict likely meanings.

When a query has multiple possible interpretations, AI systems evaluate context clues and historical patterns. They may also test result performance and adjust rankings based on user interaction.

In Search Engine Fundamentals, AI-driven interpretation improves accuracy over time. This means content must focus on clarity, entity definition, and strong topic relevance to reduce ambiguity and improve ranking potential.

How Has AI Changed Search Engine Fundamentals?

AI has transformed Search Engine Fundamentals by improving how search engines understand language, intent, and content relationships. Instead of relying only on keywords and backlinks, modern systems use machine learning models to interpret meaning and context at scale.

Artificial intelligence now influences crawling priorities, indexing decisions, ranking evaluation, and query understanding. Search engines learn from patterns in user behavior and content performance to refine results continuously.

This shift means SEO is no longer just technical optimization. It requires clarity, topic depth, and strong entity alignment. AI-driven search systems aim to deliver the most helpful result, not just the most optimized page. Understanding these AI changes is essential for staying competitive in modern search.

What Is RankBrain?

RankBrain is a machine learning system that Google uses to interpret unfamiliar or complex queries. It analyzes patterns and predicts which pages are most relevant when exact keyword matches are not enough.

RankBrain focuses on understanding relationships between words and concepts. When users type rare or ambiguous queries, this system estimates intent based on similar past searches.

In Search Engine Fundamentals, RankBrain marked a major shift toward AI-based ranking. It showed that search engines could learn and adapt instead of relying only on fixed rules. This makes content clarity and intent alignment more important than keyword repetition.

What Is BERT and Why Does It Matter?

BERT (Bidirectional Encoder Representations from Transformers) is a natural language processing model that helps search engines understand the context of words in a sentence. It analyzes how each word relates to the words around it instead of reading words in isolation.

For example, small words like “for” or “to” can change the meaning of a query. BERT helps search engines interpret these nuances accurately. This improves results for conversational and long-tail searches.

In Search Engine Fundamentals, BERT strengthened semantic understanding. It rewards content written naturally and clearly. Over-optimized or awkward keyword placement is less effective because systems now prioritize meaning over repetition.

What Is Neural Matching?

Neural matching is an AI system that connects queries with related concepts, even if exact keywords do not appear. It focuses on topic similarity rather than direct phrase matching.

If a user searches for “why does my phone battery drain fast,” neural matching can connect it to pages about battery optimization, even if the wording differs.

In Search Engine Fundamentals, neural matching enhances topic-based relevance. It allows search engines to interpret broader meaning and improve result accuracy. This reinforces the importance of comprehensive, well-structured content that fully covers a subject.

What Is MUM?

MUM (Multitask Unified Model) is an advanced AI system from Google designed to understand complex, multi-part questions across different formats. It can process text, images, and other content types simultaneously.

MUM helps search engines answer layered queries that require deeper reasoning. It can connect related topics and generate more detailed insights.

In Search Engine Fundamentals, MUM represents the move toward multimodal and AI-driven search. It signals that content must be thorough, accurate, and context-rich to remain competitive in evolving search systems.

How Do AI Models Improve Query Understanding?

AI models improve query understanding by analyzing intent, context, and semantic relationships between words. They learn from large datasets to predict what users truly want.

These models evaluate language patterns, past interactions, and search trends. They also refine results based on performance data over time. This creates a feedback loop where systems continuously improve accuracy.

In Search Engine Fundamentals, AI-driven understanding reduces dependence on exact keywords. Content must focus on solving problems clearly and completely, using natural language and structured formatting.

How Do Generative Search Systems Work?

Generative search systems use AI models to create summarized answers directly within search results. They combine indexed data with large language models to generate responses.

Instead of listing only links, generative systems may provide synthesized explanations pulled from multiple sources. However, they still rely on indexed web content as the source material for those answers.

In Search Engine Fundamentals, generative systems increase the importance of authority and structured clarity. Well-organized, trustworthy content is more likely to be cited or referenced in AI-generated responses.

What Is the Role of Information Retrieval in Search?

Information Retrieval (IR) is the system that helps search engines find and match the most relevant documents to a user’s query. It is one of the core technical layers behind Search Engine Fundamentals because it controls how results are selected from the index.

When someone types a search, IR systems look up the query in an index that covers billions of stored pages and calculate which ones are most relevant. They use mathematical models, weighting systems, and semantic analysis to compare queries with indexed content.

Without Information Retrieval, ranking would not be possible. It acts as the bridge between indexing and ranking. Understanding this layer helps you see why keyword clarity, topic focus, and structured content matter in modern search environments.

What Is Information Retrieval (IR)?

Information Retrieval (IR) is the process of identifying and retrieving relevant documents from a large database based on a search query. It powers the matching stage in search engines.

IR systems analyze both the query and stored documents. They calculate similarity scores and determine which pages should move forward into the ranking phase. This is done using statistical and semantic models.

In Search Engine Fundamentals, IR is the filtering engine. It narrows billions of indexed pages into a smaller set of candidates. If your content is not clearly aligned with searchable terms and concepts, it may never pass this retrieval stage.
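
The filtering step can be sketched with an inverted index, a common textbook structure that maps each term to the documents containing it. The three-page corpus and the function names below are made up for illustration, and counting matched terms is a deliberately crude stand-in for a real similarity score.

```python
from collections import defaultdict

# Tiny made-up corpus standing in for an index of web pages.
DOCUMENTS = {
    "page-a": "how to repair a flat bicycle tire",
    "page-b": "best bicycle shops in the city",
    "page-c": "how to bake sourdough bread",
}


def build_inverted_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index


def retrieve_candidates(query: str, index: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Return (doc_id, matched_term_count) pairs, best match first."""
    matches = defaultdict(int)
    for term in set(query.lower().split()):
        for doc_id in index.get(term, ()):
            matches[doc_id] += 1
    return sorted(matches.items(), key=lambda item: (-item[1], item[0]))


index = build_inverted_index(DOCUMENTS)
print(retrieve_candidates("bicycle tire repair", index))
```

Here `page-c` never becomes a candidate because it shares no terms with the query, which is the retrieval-stage failure described above.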

How Does Vector Space Modeling Work?

Vector Space Modeling works by representing documents and queries as mathematical vectors in a multi-dimensional space. Each word or term contributes to the document’s position within that space.

When a query is entered, the system converts it into a vector as well. It then calculates the distance or similarity between the query vector and document vectors. The closer they are, the more relevant the page is considered.

In Search Engine Fundamentals, vector modeling allows more flexible matching than simple keyword comparison. It measures overall topic similarity, helping search engines retrieve documents even when wording differs slightly.
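
As a minimal sketch of this idea, the snippet below turns text into word-count vectors and compares them with cosine similarity, one standard way to measure how close two vectors are. The helper names and sample texts are assumptions; real systems use weighted terms and far larger vocabularies.

```python
import math
from collections import Counter


def to_vector(text: str) -> Counter:
    """Represent text as a sparse vector of word counts."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (0.0 to 1.0 here)."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


query = to_vector("car repair tips")
related = to_vector("car repair tips for beginners")
unrelated = to_vector("chocolate cake recipe")

print(cosine_similarity(query, related))
print(cosine_similarity(query, unrelated))
```

The related page scores higher because its vector points in nearly the same direction as the query, while the unrelated page shares no terms and scores zero.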

What Is Term Frequency–Inverse Document Frequency (TF-IDF)?

TF-IDF is a statistical method that measures how important a word is within a document compared to the entire index. It balances term frequency with rarity across all documents.

Term Frequency (TF) measures how often a word appears on a page. Inverse Document Frequency (IDF) reduces the weight of words that appear in many documents, such as “the” or “and.” Together, they highlight meaningful terms that define a topic.

In Search Engine Fundamentals, TF-IDF was an early breakthrough in improving relevance scoring. While modern systems now use more advanced semantic models, TF-IDF still represents the foundation of keyword weighting in information retrieval systems.
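
The calculation can be sketched in a few lines using the plain textbook form, where IDF is the logarithm of total documents divided by documents containing the term. The three-sentence corpus is invented, and production systems typically use smoothed or normalized variants of this formula.

```python
import math

# Made-up three-document corpus, already split into lowercase tokens.
CORPUS = [
    "the crawler fetches the page".split(),
    "the index stores the page".split(),
    "the ranking system orders the results".split(),
]


def term_frequency(term: str, doc: list[str]) -> float:
    """Share of the document's tokens that are this term."""
    return doc.count(term) / len(doc)


def inverse_document_frequency(term: str, corpus: list[list[str]]) -> float:
    """log(N / df); zero for terms that appear in every document."""
    containing = sum(1 for doc in corpus if term in doc)
    if containing == 0:
        return 0.0
    return math.log(len(corpus) / containing)


def tf_idf(term: str, doc: list[str], corpus: list[list[str]]) -> float:
    return term_frequency(term, doc) * inverse_document_frequency(term, corpus)


for word in ["the", "page", "crawler"]:
    print(word, round(tf_idf(word, CORPUS[0], CORPUS), 4))
```

In this corpus “the” scores zero because it appears in every document, while “crawler” scores highest for the first document because it appears nowhere else.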

How Do Modern Semantic Retrieval Systems Work?

Modern semantic retrieval systems work by understanding meaning rather than relying only on exact keyword matches. They analyze relationships between words, topics, and entities.

Instead of matching identical phrases, semantic systems evaluate context. For example, a search about “car repair tips” can retrieve content discussing “vehicle maintenance advice” because the meaning overlaps.

In Search Engine Fundamentals, semantic retrieval improves result accuracy for natural language queries. It reduces dependency on exact keyword usage and rewards content that covers a topic comprehensively and clearly.

How Do Search Engines Use Embeddings?

Search engines use embeddings to represent words, sentences, and documents as numerical patterns that capture meaning. These embeddings allow systems to compare semantic similarity at scale.

An embedding converts language into a mathematical structure. Queries and pages are mapped into the same space, making it easier to measure meaning overlap rather than just word overlap.

In Search Engine Fundamentals, embeddings power advanced AI systems like neural matching and generative search. They allow search engines to interpret complex language and retrieve content that best satisfies user intent, even when wording varies significantly.
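
To show what “the same space” means in practice, the sketch below uses hand-written three-number vectors as stand-ins for embeddings. These values are invented purely for illustration; real embeddings are produced by a trained model and have hundreds of dimensions. The point is that two phrases with no words in common can still sit close together.

```python
import math

# Hand-written toy vectors standing in for learned embeddings (hypothetical).
TOY_EMBEDDINGS = {
    "car repair tips": [0.9, 0.1, 0.0],
    "vehicle maintenance advice": [0.8, 0.2, 0.1],
    "chocolate cake recipe": [0.0, 0.1, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query: str, candidates: list[str]) -> str:
    """Return the candidate whose embedding is closest to the query's."""
    query_vec = TOY_EMBEDDINGS[query]
    return max(candidates, key=lambda c: cosine(query_vec, TOY_EMBEDDINGS[c]))


print(nearest("car repair tips",
              ["vehicle maintenance advice", "chocolate cake recipe"]))
```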

What Is the Knowledge Graph and Entity-Based Search?

The Knowledge Graph and entity-based search allow search engines to understand real-world things (entities) and their relationships, not just keywords. This layer of Search Engine Fundamentals shifts search from text matching to meaning understanding.

Instead of treating content as isolated words, search engines identify people, places, brands, products, and concepts as entities. They then connect these entities in structured databases. This helps deliver smarter, more accurate results.

Entity-based search improves answer quality, reduces ambiguity, and powers features like knowledge panels. Understanding this system helps you structure content clearly, define entities properly, and strengthen semantic relevance in modern SEO strategies.

What Is an Entity?

An entity is a clearly defined real-world object such as a person, company, location, product, or concept. Unlike keywords, entities have unique identities and attributes.

For example, a company name is an entity. A city is an entity. Even abstract ideas like “machine learning” can be treated as entities if they have defined meaning and connections.

In Search Engine Fundamentals, entities help search engines understand context. Instead of matching words blindly, systems recognize who or what is being discussed. This reduces confusion and improves relevance, especially for short or ambiguous queries.

How Do Search Engines Connect Entities?

Search engines connect entities by analyzing relationships between them and mapping those connections in structured databases. They identify patterns across billions of pages to determine how entities interact.

For example, a company may be linked to its founder, headquarters, products, and industry. These connections help search engines understand context more deeply.

In Search Engine Fundamentals, entity relationships strengthen semantic accuracy. When content clearly explains how entities relate, it becomes easier for search engines to interpret meaning and match results to complex user queries.
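
One common way to picture these connections is as subject, predicate, object triples. The sketch below stores a handful of invented triples about a fictional company and looks up related entities. The entity names, predicate labels, and helper functions are all hypothetical and are not taken from any real search engine’s data model.

```python
from collections import defaultdict

# Hypothetical (subject, predicate, object) triples about a made-up company.
TRIPLES = [
    ("Example Corp", "founded_by", "Jane Doe"),
    ("Example Corp", "headquartered_in", "Springfield"),
    ("Example Corp", "makes", "Example Phone"),
    ("Example Corp", "industry", "Consumer Electronics"),
    ("Jane Doe", "born_in", "Springfield"),
]


def build_graph(triples):
    """Group outgoing (predicate, object) edges by subject entity."""
    graph = defaultdict(list)
    for subject, predicate, obj in triples:
        graph[subject].append((predicate, obj))
    return graph


def related(graph, entity: str, predicate: str) -> list[str]:
    """Return every entity linked to `entity` by `predicate`."""
    return [obj for pred, obj in graph.get(entity, []) if pred == predicate]


graph = build_graph(TRIPLES)
print(related(graph, "Example Corp", "founded_by"))
```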

What Is the Knowledge Graph?

The Knowledge Graph is a massive database that stores entities and their relationships in a structured format. It allows search engines to present factual information directly in search results.

When you see a knowledge panel with details about a person, company, or place, that information often comes from the Knowledge Graph. It organizes verified data into connected nodes.

In Search Engine Fundamentals, the Knowledge Graph supports entity-based search. It enhances result quality by linking content to real-world facts. Websites that clearly define entities and provide structured information are more likely to be associated with these knowledge systems.

How Does Entity-Based Indexing Improve Accuracy?

Entity-based indexing improves accuracy by organizing content around concepts and relationships instead of isolated keywords. This helps search engines interpret deeper meaning.

If multiple pages mention similar entities and relationships, search systems can cluster them by topic rather than exact phrase usage. This reduces ranking errors caused by wording differences.

In Search Engine Fundamentals, entity-based indexing strengthens semantic search. It ensures that results reflect true intent and context, making content depth and clarity more important than keyword repetition.

How Does Structured Data Support Entity Recognition?

Structured data supports entity recognition by providing machine-readable information that clearly defines page elements. It uses standardized formats to label entities and their properties.

For example, structured data can specify that a page represents a product, article, organization, or event. This removes ambiguity and improves search engine interpretation.

In Search Engine Fundamentals, structured data acts as a clarity tool. It strengthens entity recognition, increases eligibility for rich results, and helps search engines connect your content with broader knowledge systems.
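
As a small illustration, the snippet below builds JSON-LD markup for an organization using the schema.org vocabulary. The company name, URL, and founder are placeholders, and `organization_jsonld` is a helper invented for this sketch. The resulting JSON is normally placed inside a `<script type="application/ld+json">` tag in the page.

```python
import json


def organization_jsonld(name: str, url: str, founder: str) -> str:
    """Build schema.org Organization markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "founder": {"@type": "Person", "name": founder},
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
print(organization_jsonld("Example Corp", "https://example.com", "Jane Doe"))
```

The `@type` field is what tells a search engine that the page describes an organization and not, for instance, a product or an event.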

How Do Search Engines Combat Spam?

Search engines combat spam by using automated systems, AI models, and manual reviews to detect and filter low-quality or manipulative content. Protecting search quality is a critical part of Search Engine Fundamentals because ranking systems only work when spam is minimized.

Spam attempts to manipulate rankings using deceptive tactics like keyword stuffing, fake backlinks, hidden text, or copied content. If search engines did not fight spam aggressively, users would lose trust in search results.

Modern search engines combine machine learning systems with human quality reviewers. They analyze link patterns, content signals, and behavioral data to identify suspicious activity. Understanding these anti-spam systems helps you avoid risky tactics and build sustainable, long-term visibility.

What Is Web Spam?

Web spam is any attempt to manipulate search rankings using deceptive or low-quality tactics. It includes practices designed to trick search engines instead of helping users.

Common spam techniques include:

- Keyword stuffing

- Cloaking (showing different content to bots than to users)

- Link schemes

- Auto-generated thin content

Web spam harms user experience and reduces search quality. In Search Engine Fundamentals, spam detection protects the integrity of ranking systems. Websites that focus on genuine value and ethical optimization are far less likely to be negatively affected.

What Are Manual Actions?

Manual actions are penalties applied by human reviewers when a website violates search engine guidelines. These actions occur after a manual review confirms spammy behavior.

If a site receives a manual action, certain pages or the entire domain may lose visibility in search results. The website owner is usually notified through search console tools and given instructions to fix the issue.

In Search Engine Fundamentals, manual actions act as enforcement mechanisms. They are less common than algorithmic filters but more severe. Recovery requires correcting violations and submitting a reconsideration request.

What Are Algorithmic Penalties?

Algorithmic penalties are automatic ranking adjustments triggered by spam detection systems. Unlike manual actions, these do not involve human review.

When a site violates quality standards, automated systems may reduce rankings or ignore certain signals, such as low-quality backlinks. These penalties often happen during algorithm updates.

In Search Engine Fundamentals, algorithmic systems constantly evaluate content quality and link integrity. Because these systems are automated and ongoing, consistent compliance with guidelines is the safest long-term strategy.

What Is SpamBrain?

SpamBrain is Google’s AI-based spam detection system, designed to identify and neutralize spam patterns. It uses machine learning to detect manipulative tactics more effectively than rule-based systems.

SpamBrain analyzes link networks, content patterns, and suspicious behaviors. It can detect large-scale spam schemes and adapt to new tactics over time.

In Search Engine Fundamentals, AI-driven systems like SpamBrain represent a major advancement in search protection. They reduce the impact of link spam and low-quality tactics, making ethical SEO and strong content quality more important than ever.

How Do Link Spam Detection Systems Work?

Link spam detection systems analyze backlink patterns to identify unnatural or manipulative link building. They evaluate link sources, anchor text patterns, and domain authority relationships.

If a site acquires many low-quality or irrelevant backlinks, detection systems may ignore those links or reduce their value. In severe cases, rankings may drop.

Modern systems focus on neutralizing spam rather than punishing sites harshly. In Search Engine Fundamentals, earning natural, relevant backlinks is the safest approach. Quality links from trusted sources strengthen authority, while artificial link schemes increase risk.
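
One of the signals mentioned above, anchor text patterns, can be sketched as a simple concentration check: if most backlinks use the same anchor text, the profile looks less natural. The 0.6 threshold and both function names are arbitrary assumptions for this example; real detection systems combine many signals and do not publish their thresholds.

```python
from collections import Counter


def anchor_concentration(anchors: list[str]) -> float:
    """Fraction of backlinks that share the single most common anchor text."""
    if not anchors:
        return 0.0
    counts = Counter(anchor.lower().strip() for anchor in anchors)
    return counts.most_common(1)[0][1] / len(anchors)


def looks_unnatural(anchors: list[str], threshold: float = 0.6) -> bool:
    """Flag a backlink profile dominated by one anchor (assumed threshold)."""
    return anchor_concentration(anchors) > threshold


natural = ["Example Corp", "this guide", "example.com", "read more"]
suspicious = ["buy cheap shoes"] * 9 + ["Example Corp"]

print(looks_unnatural(natural), looks_unnatural(suspicious))
```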

How Do Search Engines Deliver Results on the SERP?

Search engines deliver results on the SERP by combining organic listings, paid ads, featured elements, and structured data outputs. The SERP (Search Engine Results Page) is where all ranking decisions become visible to users.

In modern Search Engine Fundamentals, the SERP is not just a list of blue links. It includes featured snippets, knowledge panels, rich results, local packs, and ads. Each element is triggered based on query intent and content structure.

Search engines dynamically assemble the SERP in real time. They evaluate relevance, authority, and format eligibility before displaying results. Understanding how SERPs are structured helps you optimize not just for ranking, but also for visibility within different result types.

What Is a SERP (Search Engine Results Page)?

A SERP is the page that displays results after a user enters a search query. It contains all the links, features, and information returned by the search engine.

SERPs vary depending on the query. Informational searches may show featured snippets or knowledge panels, while transactional searches may highlight product listings or ads.

In Search Engine Fundamentals, the SERP represents the final output of crawling, indexing, and ranking systems. Studying SERP layouts for your target keywords helps you understand what format search engines expect and prioritize.

What Are Organic Results?

Organic results are unpaid listings that appear based on ranking algorithms. They are earned through relevance, authority, and content quality rather than advertising spend.

Organic results typically include a page title, URL, and a short description, which is often drawn from the meta description. Their position depends on how well the page aligns with query intent and ranking signals.

In Search Engine Fundamentals, organic results are the core outcome of SEO efforts. Strong technical optimization, high-quality content, and authoritative backlinks increase the chance of securing top organic positions.

What Are Featured Snippets?

Featured snippets are highlighted answer boxes that appear at the top of the SERP. They provide direct answers extracted from web pages.

Search engines select snippet content that clearly answers a question in a structured format. Paragraph summaries, lists, and tables are commonly used.

In Search Engine Fundamentals, featured snippets reward clarity and direct answering. Structuring content with concise definitions and well-formatted sections increases the chance of being selected for this prime visibility spot.

What Are Knowledge Panels?

Knowledge panels are informational boxes that display key facts about entities such as people, companies, or places. They appear on the side or top of the SERP.

These panels pull information from structured databases and entity systems. They often include images, summaries, related entities, and verified data.

In Search Engine Fundamentals, knowledge panels rely on entity recognition and authoritative sources. Building a strong brand presence and using structured data increases the likelihood of being associated with these panels.

What Are Rich Results?

Rich results are enhanced search listings that include additional visual or interactive elements. These may display ratings, prices, images, FAQs, or event details.

Rich results are enabled by structured data markup. When search engines clearly understand page elements, they may display enhanced features to improve user experience.

In Search Engine Fundamentals, rich results improve click-through rates by making listings more visible and informative. Implementing accurate schema markup strengthens eligibility for these enhanced SERP features.

How Do Ads Differ from Organic Results?

Ads differ from organic results because they are paid placements rather than earned rankings. Advertisers bid on keywords to appear at the top or bottom of the SERP.

Ads are marked as sponsored and are ranked based on bid amount, quality score, and ad relevance. Organic results, in contrast, rely on algorithmic evaluation.

In Search Engine Fundamentals, both ads and organic results can appear together on the SERP. However, long-term sustainable visibility comes from strong organic positioning supported by quality content and technical excellence.
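
To make the difference concrete, here is a simplified sketch of ad ordering that multiplies bid by quality score. This formula is an assumption chosen for illustration: this guide does not state how the factors are combined, and real ad auctions use more inputs. The `Ad` class and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    advertiser: str
    bid: float            # maximum amount the advertiser will pay per click
    quality_score: float  # hypothetical 1-10 rating of relevance and quality


def ad_rank(ad: Ad) -> float:
    """Simplified score: bid multiplied by quality score (an assumption)."""
    return ad.bid * ad.quality_score


def order_ads(ads: list[Ad]) -> list[Ad]:
    """Sort ads from highest to lowest simplified rank."""
    return sorted(ads, key=ad_rank, reverse=True)


ads = [Ad("Advertiser A", bid=2.00, quality_score=4),
       Ad("Advertiser B", bid=1.50, quality_score=8)]
print([ad.advertiser for ad in order_ads(ads)])
```

Under this simplified model, a lower bid can still win the top slot when the ad is more relevant, which is one way paid placement differs from simply buying the highest position.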

How Do Search Engines Evaluate Content Quality?

Search engines evaluate content quality by analyzing trust, expertise, originality, usefulness, and user satisfaction signals. Quality evaluation is a central part of Search Engine Fundamentals because ranking systems prioritize helpful and reliable content.

Modern search engines use AI systems, quality guidelines, and behavioral data to determine whether a page genuinely benefits users. They look at author credibility, topic depth, structure, engagement patterns, and site reputation.

Low-quality content may still get crawled and indexed, but it will struggle to rank competitively. High-quality content, on the other hand, earns trust and long-term visibility. Understanding how search engines assess quality allows you to create pages that meet both algorithmic and human expectations.

What Is E-E-A-T?

E-E-A-T stands for Experience, Expertise, Authoritativeness, and Trustworthiness. It is a framework from Google’s Search Quality Rater Guidelines used to evaluate content credibility and reliability.

Experience means the content reflects real-world knowledge. Expertise refers to subject knowledge depth. Authoritativeness comes from reputation and recognition. Trustworthiness focuses on accuracy and transparency.

In Search Engine Fundamentals, E-E-A-T influences how ranking systems assess page quality. Clear author information, accurate data, reputable backlinks, and strong brand presence all strengthen E-E-A-T signals and improve ranking stability over time.

How Do Search Engines Measure Expertise?

Search engines measure expertise by analyzing content depth, topic coverage, author credentials, and site reputation. They look for signals that show genuine knowledge.

Pages that explain concepts clearly, provide accurate details, and cover topics thoroughly are more likely to be considered expert-level. Author bios, citations, and external references strengthen credibility.

In Search Engine Fundamentals, expertise is not based on claims alone. It is reflected in structured, accurate, and comprehensive content. Consistent publishing within a focused niche also builds long-term expertise recognition.

How Is Content Helpfulness Assessed?

Content helpfulness is assessed by evaluating how well a page satisfies user intent and solves the searcher’s problem. Search engines analyze clarity, depth, structure, and engagement patterns.

Helpful content answers questions directly, avoids fluff, and provides actionable guidance. Poorly structured or misleading content often results in low engagement signals.

In Search Engine Fundamentals, helpfulness is closely tied to user satisfaction. Clear formatting, logical flow, and complete answers increase the likelihood of strong ranking performance and sustained visibility.

What Is Topical Authority?

Topical authority refers to a website’s overall expertise and credibility within a specific subject area. It is built through consistent, high-quality content focused on a defined niche.

Search engines evaluate how comprehensively a site covers related subtopics and whether it provides depth across a subject. Internal linking between related pages strengthens topical signals.

In Search Engine Fundamentals, topical authority improves ranking consistency. Instead of isolated articles, building interconnected content clusters signals subject mastery and increases long-term trust in search systems.

How Do Core Updates Affect Rankings?

Core updates are broad algorithm changes that refine how search engines evaluate content quality and relevance. They often impact rankings across multiple industries.

These updates do not target individual sites specifically. Instead, they improve ranking systems to better identify helpful and authoritative content. Pages that rely on thin content or weak authority may drop after updates.

In Search Engine Fundamentals, adapting to core updates requires focusing on quality, expertise, and user value rather than short-term tactics. Continuous improvement ensures resilience against algorithm changes.

How Do Different Search Engines Compare?

Different search engines compare based on their ranking systems, data sources, user intent focus, and AI integration. While the core Search Engine Fundamentals (crawl, index, retrieve, and rank) remain similar, each platform applies them differently.

Some engines prioritize backlinks heavily. Others rely more on user engagement or transactional behavior. Video platforms, ecommerce engines, and AI-powered search tools all interpret queries in unique ways.

Understanding these differences helps you optimize content more strategically. What works for a traditional web search engine may not perform the same way on a video or product-based platform. Let’s break down how major systems differ and what that means for visibility.

How Is Google Different from Bing?

Google and Bing differ mainly in ranking signals, AI implementation, and market focus. Both follow Search Engine Fundamentals, but their weighting systems vary.

Google places strong emphasis on semantic relevance, authority signals, and AI-driven query interpretation. Bing often weighs social signals and multimedia integration more heavily. Google’s index is larger and frequently updated, while Bing may index certain multimedia content differently.

In practical SEO terms, high-quality content and backlinks matter on both platforms. However, structured data, clear metadata, and multimedia optimization may have slightly stronger influence in Bing’s ecosystem. Optimizing for both ensures broader reach.

How Does YouTube Function as a Search Engine?

YouTube functions as a search engine by indexing and ranking video content based on relevance and engagement signals. It follows similar Search Engine Fundamentals but applies them to video format.

Instead of backlinks, YouTube relies heavily on watch time, click-through rate, audience retention, and engagement metrics like comments and likes. Titles, descriptions, and tags still influence discoverability.

YouTube prioritizes videos that keep users on the platform longer. This means strong hooks, structured content flow, and consistent uploads matter significantly. Understanding YouTube as a search engine helps content creators optimize beyond simple keyword usage.

How Does Amazon Search Work?

Amazon search works by prioritizing products most likely to convert into sales. While it uses indexing and retrieval systems similar to web search engines, its ranking focus is transaction-driven.

Amazon’s algorithm considers factors such as:

- Sales velocity

- Conversion rate

- Product reviews

- Pricing competitiveness

- Keyword relevance

Unlike traditional search engines, authority is less about backlinks and more about sales performance. In ecommerce environments, optimizing for Amazon means improving product listings, images, descriptions, and review credibility to boost ranking visibility.

How Do AI Search Engines Differ from Traditional Search?

AI search engines differ from traditional search by generating summarized answers instead of only listing ranked links. They combine indexing systems with large language models to provide synthesized responses.

Traditional search displays ranked pages for users to explore. AI-powered search systems analyze multiple sources and generate conversational outputs. However, they still rely on indexed web data.

In Search Engine Fundamentals, AI search expands the importance of clarity, authority, and structured content. Pages that are well-organized and trustworthy are more likely to be cited or referenced in AI-generated responses. This shift rewards comprehensive and accurate content creation.

What Are Common Misconceptions About Search Engines?

Common misconceptions about search engines often come from outdated SEO advice or oversimplified explanations. Understanding the truth behind these myths is essential to fully grasp Search Engine Fundamentals.

Many people believe ranking is controlled by a fixed checklist of factors, or that simple tricks can guarantee visibility. In reality, modern search systems are complex, AI-driven, and constantly evolving.

Believing myths leads to poor decisions, wasted effort, and unstable rankings. By clearing up these misunderstandings, you can focus on sustainable SEO strategies that align with how search engines truly crawl, index, and rank content.

Do Search Engines Use “200 Ranking Factors”?

No, search engines do not use a fixed list of exactly 200 ranking factors. This number became popular years ago but oversimplifies how ranking systems actually work.

Modern search systems use hundreds of signals processed through multiple AI-driven ranking systems. These signals interact dynamically rather than functioning as isolated checklist items.

In Search Engine Fundamentals, ranking is not about optimizing 200 separate boxes. It is about aligning with broader systems that evaluate relevance, authority, quality, and user satisfaction collectively.

Is SEO Just About Keywords?

No, SEO is not just about keywords. While keywords help search engines understand topics, modern ranking systems prioritize intent, context, and quality over repetition.

Search engines now analyze semantic relationships, entities, user behavior, and overall content depth. Keyword stuffing or exact-match obsession no longer works effectively.

In Search Engine Fundamentals, keywords are entry signals, not ranking guarantees. Clear structure, topic coverage, and helpful information matter far more than repeating phrases multiple times.

Does Submitting a Sitemap Guarantee Indexing?

No, submitting a sitemap does not guarantee indexing. A sitemap helps search engines discover pages, but it does not ensure they will be stored in the index.

Search engines still evaluate quality, duplication, technical health, and content value before indexing. Thin or low-value pages may be ignored even if listed in a sitemap.

In Search Engine Fundamentals, sitemaps improve discovery, not approval. Indexing eligibility depends on overall content quality and compliance with search guidelines.
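
For reference, a sitemap is just an XML file listing URLs. The minimal sketch below builds one with Python's standard library; the `build_sitemap` helper and the example.com URLs are assumptions made for illustration. Nothing in the file tells a search engine that a page deserves indexing, which is why submission alone guarantees nothing.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML string listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page_url in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page_url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages, used only for illustration.
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/blog/search-engine-fundamentals",
])
print(sitemap_xml)
```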

Can You Trick Search Engines Easily?

No, you cannot easily trick modern search engines. AI-based systems detect manipulation tactics much more effectively than in the past.

Techniques like keyword stuffing, link schemes, or hidden text may lead to filtering or ranking drops. Spam detection systems continuously evolve to neutralize artificial tactics.

In Search Engine Fundamentals, sustainable ranking comes from quality content, technical clarity, and genuine authority. Shortcuts may provide temporary gains, but long-term success depends on aligning with search engine systems rather than trying to manipulate them.

Why Do Search Engine Fundamentals Matter for SEO?

Search Engine Fundamentals matter for SEO because they explain how visibility is earned, not guessed. When you understand how crawling, indexing, ranking, and query interpretation work, your optimization decisions become strategic instead of random.

SEO success is not about tricks or shortcuts. It is about aligning your website with how search systems function. If you ignore the fundamentals, you risk creating content that search engines cannot discover, understand, or trust.

In today’s AI-driven landscape, Search Engine Fundamentals are even more important. Search engines now evaluate meaning, authority, and usefulness at a deeper level. Businesses that understand these systems build sustainable visibility and long-term organic growth.

How Do Fundamentals Shape Technical SEO?

Search Engine Fundamentals shape technical SEO by defining how pages must be structured for crawling and indexing. Technical SEO ensures that bots can access, render, and process your content efficiently.

Crawlability, clean URL structure, internal linking, mobile optimization, and structured data all stem directly from fundamental search processes. If search engines cannot crawl or render your site correctly, they cannot evaluate your content, and ranking well becomes very unlikely.

In practical terms, technical SEO is the implementation of Search Engine Fundamentals at the infrastructure level. A technically strong website removes barriers and allows ranking systems to evaluate content fairly and accurately.
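
One quick crawlability check is whether your robots.txt rules block a page you want crawled. The sketch below uses Python's standard `urllib.robotparser`; the rules in `ROBOTS_TXT`, the `ExampleBot` user agent, and the URLs are hypothetical, and the `is_crawlable` helper is an illustrative wrapper rather than part of any SEO tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def is_crawlable(robots_txt, user_agent, url):
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in ("https://example.com/blog/post", "https://example.com/admin/settings"):
    print(url, is_crawlable(ROBOTS_TXT, "ExampleBot", url))
```

A check like this only covers robots.txt rules. Rendering problems, server errors, and broken internal links need separate testing.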

How Do Fundamentals Impact Content Strategy?

Search Engine Fundamentals impact content strategy by focusing content creation around intent, relevance, and authority. Content must align with how search engines interpret and retrieve information.

Understanding indexing and retrieval helps you structure content clearly with defined topics and entities. Understanding ranking helps you prioritize depth, expertise, and user satisfaction.

Instead of creating random articles, businesses can build topic clusters, strengthen internal linking, and establish topical authority. Search Engine Fundamentals turn content strategy into a structured system rather than scattered publishing.

Why Is Understanding Search Systems Important for AI Search?

Understanding search systems is important for AI search because AI relies on structured, high-quality indexed content. Generative search tools pull information from reliable web sources.

If your content is unclear, poorly structured, or lacks authority signals, it may not be referenced or surfaced in AI-driven responses. Entity clarity, structured data, and semantic depth increase visibility opportunities.

In modern Search Engine Fundamentals, AI does not replace indexing and ranking; it builds on them. Businesses that align content with these systems position themselves for visibility in both traditional and AI-enhanced search environments.
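
Structured data is often added to a page as JSON-LD using the schema.org vocabulary. The sketch below generates a minimal `Article` object; the `article_jsonld` helper and the sample headline, author, and date are assumptions for illustration, and a real page would usually include more properties.

```python
import json


def article_jsonld(headline, author_name, date_published):
    """Return a JSON-LD string describing an article with schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    return json.dumps(data, indent=2)


# Hypothetical values, used only for illustration.
print(article_jsonld("Search Engine Fundamentals", "Jane Doe", "2024-01-15"))
```

The output is typically placed inside a `<script type="application/ld+json">` tag in the page's HTML so that crawlers can read the entity details directly.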

How Can Businesses Use Search Fundamentals Strategically?

Businesses can use Search Engine Fundamentals strategically by building SEO systems aligned with crawlability, authority, and intent satisfaction. This turns search into a predictable growth channel.

Strategic actions include:

- Structuring content around topic clusters

- Strengthening internal and external linking

- Improving technical crawl efficiency

- Publishing authoritative, experience-based content

Instead of chasing trends, businesses that understand fundamentals build durable organic traffic. When SEO decisions are guided by system-level understanding, rankings become more stable and scalable over time.

What is a search engine?

A search engine is a software system that finds, organizes, and displays information from the Internet in response to a user’s query. It crawls web pages, builds an index of content, and uses algorithms to rank the most relevant results for each search.

What are the main stages of how search engines work?

Search engines work in three main stages:

- Crawling: discovering web pages using automated bots called crawlers.

- Indexing: analyzing and storing page information in a searchable index.

- Ranking: ordering results based on relevance and quality for a user query.

What does crawling mean in search engines?

Crawling is the process where search engine bots (also called spiders) systematically visit web pages to find new or updated content, following links from one page to another to build a list of URLs to index.
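
Link-following can be sketched as a breadth-first traversal. To keep the example runnable without network access, the site below is a hypothetical in-memory link graph (`LINKS`) rather than real fetched pages; a real crawler would also respect robots.txt, rate limits, and much more.

```python
from collections import deque

# Hypothetical site: each page maps to the pages it links to.
LINKS = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/"],
    "/about": [],
    "/blog/post-1": ["/blog"],
    "/orphan": ["/"],  # no page links here, so a crawler never finds it
}


def crawl(start):
    """Return pages in the order a breadth-first crawler discovers them."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        page = queue.popleft()
        order.append(page)
        for link in LINKS.get(page, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order


print(crawl("/"))
```

Note that `/orphan` never shows up in the result because nothing links to it. This is the practical reason internal linking matters for discovery.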

Why is indexing important in search engines?

Indexing lets search engines store and organize crawled content in a massive database so they can quickly match pages to user queries when a search is performed. Pages that are not indexed cannot appear in search results.
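
A common data structure for this kind of fast matching is an inverted index, which maps each word to the pages that contain it. The sketch below is a deliberately simplified assumption of how that works: the sample pages are hypothetical, and production indexes also store positions, handle stemming and synonyms, and weigh many other signals.

```python
from collections import defaultdict


def build_index(pages):
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word in the query."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical pages, used only for illustration.
PAGES = {
    "/crawling": "how search engines crawl pages",
    "/indexing": "how search engines index pages",
    "/recipes": "easy bread recipes",
}

index = build_index(PAGES)
print(search(index, "search engines index"))
```

Looking a word up in the index is a single dictionary access, which is why queries can be answered quickly without rescanning every page.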

How do search engines decide the order of results?

Search engines use ranking algorithms that weigh many signals, including relevance, quality, content context, and links, so that the most useful results appear first for a given search. PageRank pioneered this kind of link-based ranking.

What is PageRank in a search engine?

PageRank is one of the earliest search ranking algorithms used by Google. It evaluates the importance of a page based on the number and quality of links pointing to it: pages with more high-quality backlinks tend to rank higher in search results.
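
The idea can be illustrated with the textbook power-iteration form of PageRank. This is a sketch of the published algorithm on a tiny hypothetical link graph, not Google's production system; the damping factor of 0.85 and the iteration count are conventional assumptions, and every linked page is assumed to appear as a key in `links`.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Return a dict of page -> score using simple power iteration.

    links maps each page to the list of pages it links to.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with the same small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if outlinks:
                # Split this page's score evenly across the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # A page with no outlinks spreads its score across all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical graph: "a" and "b" both link to "c", and "c" links back to "a".
scores = pagerank({"a": ["c"], "b": ["c"], "c": ["a"]})
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this graph, page `b` has no inbound links and ends up with the lowest score, while `c`, which receives links from two pages, scores highest.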